Denied claims are often treated as a series of isolated issues. However, this $20 billion problem stems from deeper causes: fragmented data flows, inconsistent documentation practices, outdated integrations, and a lack of real-time validation. Understanding why denials happen and how to prevent them systematically is critical.

Eight years ago, the cost to rework a single claim was estimated at $25. The financial burden has only increased over time. According to Change Healthcare, denied claims put $4.9M of net patient revenue at risk for an average hospital, with $118 spent on appeals per claim. Reducing preventable denials by even a few percentage points can produce a direct annual impact of $2M–$10M+ for organizations processing several hundred thousand claims a year. These savings come not only from recovered revenue but also from reduced labor, lower A/R days, and fewer write-offs.

The article will help you understand how payer behavior, population disparities, and market dynamics shape the denial landscape. It will also offer 8 denial prevention strategies for leaders across product, engineering, revenue cycle, and clinical ops.

Table of contents:

- The $20B denial problem: scale and trends

- Top reasons why insurance claims are denied

- Engineering and product strategies to reduce denials

- 1 Real-time eligibility and coverage verification

- 2 Prior-authorization state machine and lifecycle tracking

- 3 Coding accuracy automation, audits, AI

- 4 Payer-aware validation engines

- 5 Duplicate detection & idempotent submissions

- 6 Timely-filing monitoring & SLA alerting

- 7 API-first integrations and interoperability

- 8 AI-driven denial prediction, risk scoring

- Conclusion

The $20B denial problem: scale and trends

Across marketplace plans, 19% of in-network claims and 37% of out-of-network claims are denied. 41% of providers report that at least one in ten claims is rejected. In some states and payer segments, denial rates vary widely, with certain plans denying anywhere from 1% to 54% of claims.

Variation by payer type

Denial behavior differs substantially across payer categories:

- Marketplace plans tend to exhibit the highest denial rates. In 2023, in-network denial rates averaged 19%, and some plans denied more than half of all submitted claims. This pattern is often tied to stricter documentation rules, narrow coverage policies, and more aggressive enforcement of prior authorization.

- Commercial payers deny proportionally fewer claims overall. Rates typically fall below 10%. However, they issue a higher share of denials related to prior authorization, coding discrepancies, and medical necessity. Commercial plans rely on automated claim edits, which magnify documentation and coding weaknesses.

- Medicare Advantage plans continue to show elevated denial patterns tied to medical necessity and authorization workflows. In several audits, organizations were found to wrongfully deny services that should have been covered, often due to proprietary algorithms or overly restrictive interpretations of Medicare rules (HHS OIG, 2022).

- Medicaid programs vary widely across states because they operate under different benefit structures, reimbursement policies, and administrative requirements. State-level studies show denial rates ranging from 8% to more than 30%, depending on the maturity of state systems and documentation standards.

These variations highlight why RCM systems must be configurable, data-driven, and capable of adjusting to payer-specific rules instead of relying on static workflows.

Socioeconomic disparities

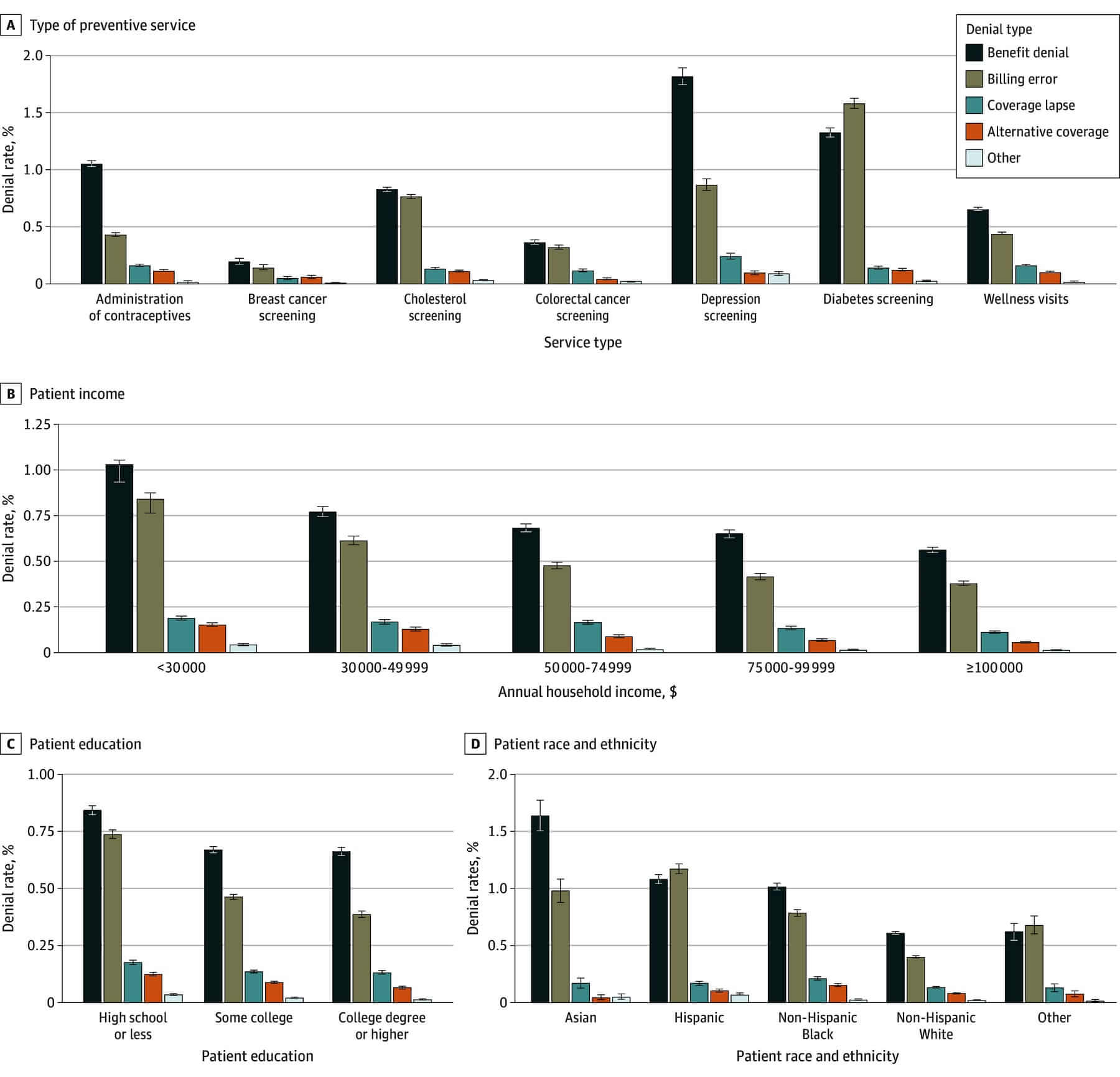

Denials affect lower-income households and historically marginalized groups at a disproportionate rate. A comprehensive US dataset spanning 2017–2020 reveals that:

- Patients from low-income households are 43% more likely to experience denials.

- Denial rates are much higher for non-Hispanic Black (2.04%), Hispanic (2.44%), and Asian patients (2.72%) than non-Hispanic White patients (1.13%).

A 2025 follow-up study found that low-income and minority patients were less likely to have their denials contested. Even after appeal, they received lower average cost-sharing reductions than higher-income or White patients. These disparities show that denial-rate reduction is also a matter of equity, compliance, and reputational risk. They reinforce why reliable, automated data-capture and validation tools must be part of any next-generation RCM architecture.

Image source: PubMed Central

Market trends

The denial-management market is expanding rapidly, growing from $5.13B in 2024 to $8.93B by 2030. This growth is driven by:

- Rising denial volumes.

- Increasing administrative burden.

- The shift toward automation, AI, and integrated RCM systems.

- Provider pressure to reduce A/R and improve revenue predictability.

The market is also undergoing qualitative change. Historically, vendors focused on downstream workflows (appeals, resubmissions, and follow-up). Newer approaches emphasize denial prevention. Real-time eligibility, automated prior authorization workflows, payer-aware validation engines, and AI-driven risk scoring are being embedded earlier in the claim lifecycle.

Market consolidation is another key trend. Traditional RCM outsourcers are acquiring or partnering with analytics and AI vendors. Clearinghouses are offering more built-in rules engines and dashboards. Core EHR and PMS vendors are expanding their native denial-management features.

Reasons why insurance claims are denied

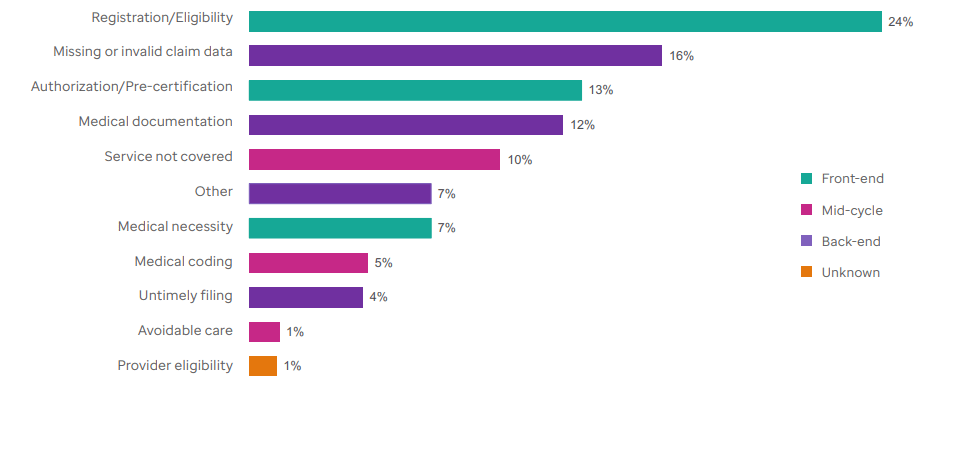

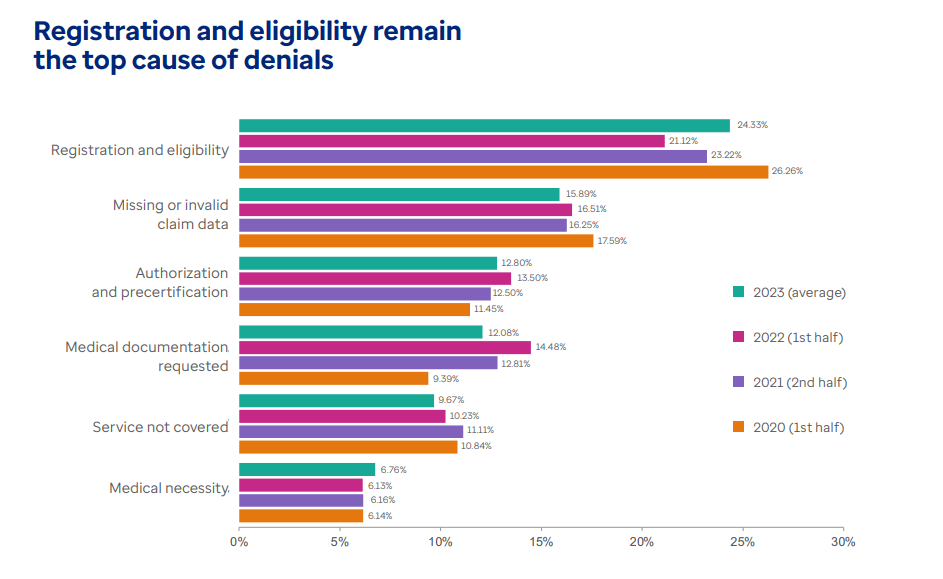

# 1 Eligibility and registration

Eligibility and registration issues have historically accounted for almost 25% of all denied claims. These occur when information captured during scheduling or registration is incomplete or inaccurate.

A common example is a returning patient whose coverage changes at the start of a new plan year. A rejection is likely if the staff relies on the previous visit’s insurance card rather than re-verifying eligibility. Another example appears in multi-payer households. If the coordination of benefits (COB) order isn’t updated and the wrong payer is billed first, the primary payer denies the claim. This forces the provider into a long, unnecessary appeals loop. Technically, these failures stem from:

- Fragmented registration systems.

- Lack of real-time eligibility.

- Workflows that depend on staff intervention instead of system safeguards.

- Weak integration with clearinghouses or payers.

Organizations that do not run automated eligibility checks at scheduling, pre-visit, and day-of-service are especially vulnerable.

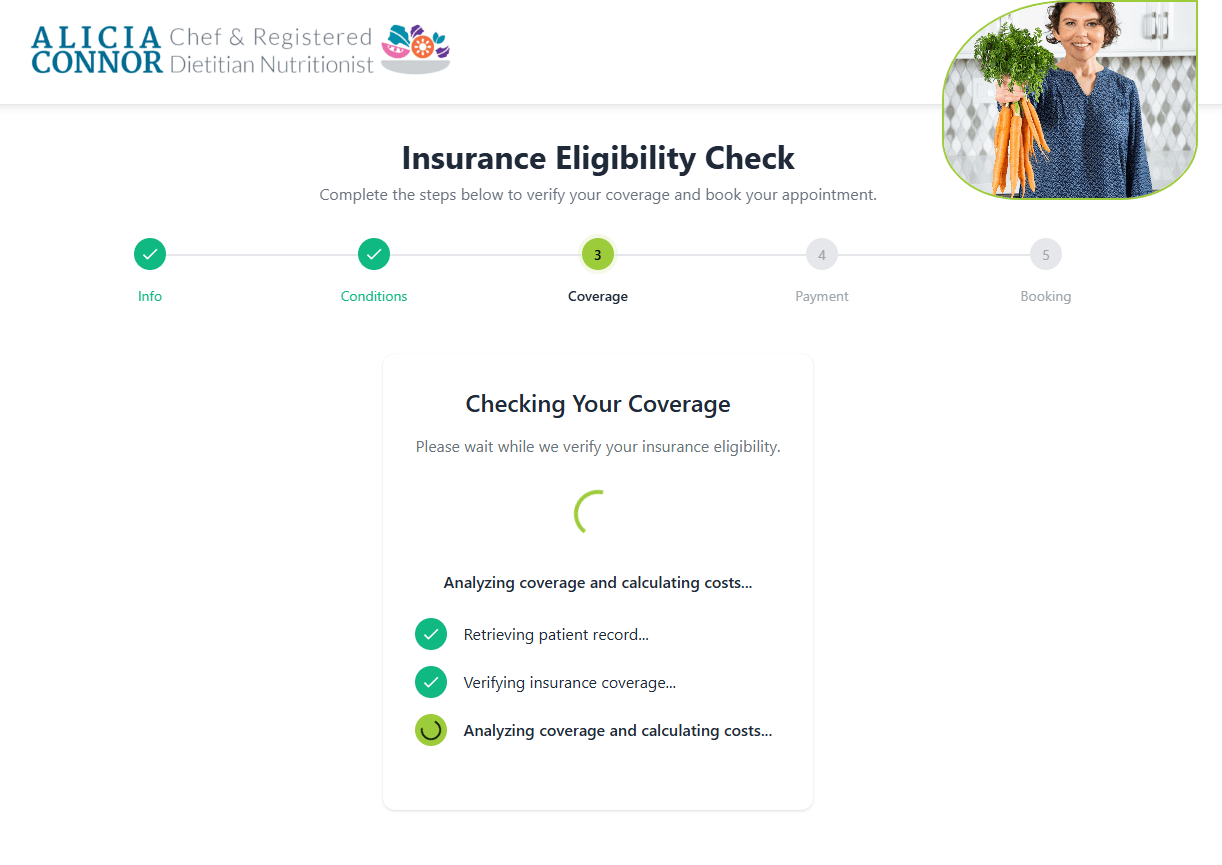

Automated eligibility verification in a custom EHR solution developed by MindK

# 2 Missing or invalid claim data

According to Change Healthcare, this category accounts for up to 16% of all denials across 124 million claims. Such denials are common when:

- Essential elements of a claim, such as NPIs, taxonomy codes, plan IDs, or service location codes, are absent or incompatible with payer requirements.

- Demographic fields are incomplete or inconsistent across systems.

- Claim metadata (e.g., place of service, service dates, or diagnosis-procedure combinations) fails validation rules applied by clearinghouses or payers.

Many of these issues point to a suboptimal RCM architecture. Outdated configuration tables, conflicting versions of patient or provider data across EHR and PMS systems, and interfaces that allow unvalidated free-text entry all contribute to this type of denial.

# 3 Authorization/referral lapses

Prior authorization (PA) errors account for up to 12% of denials. Most of them stem from avoidable process failures: authorizations recorded in spreadsheets instead of structured systems, outdated codes that no longer match what the payer approved, or approvals that are never linked to the documented encounter.

Take, for instance, a patient waiting for an MRI of the lumbar spine. The clinic obtains prior authorization for CPT 72148 (MRI lumbar spine without contrast). On the day of the visit, the physician determines that contrast is necessary and performs CPT 72158 (MRI lumbar spine with and without contrast). Because the authorization was never updated to reflect the new procedure, the claim is submitted with a non-authorized CPT code.

Without a formal PA workflow, the staff has no way to automatically detect such mismatches. When authorization data lives outside core systems, engineering teams cannot enforce validation rules or surface risks to clinicians or billers. As a result, preventable denials accumulate, creating unnecessary rework and delaying reimbursement.
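The MRI mismatch above is easy to catch once authorizations live in a structured record. The following is a minimal sketch, assuming a hypothetical `Authorization` record and a `find_unauthorized_codes` helper; the field names, authorization number, and dates are illustrative and not taken from any specific EHR or payer API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Authorization:
    """Hypothetical structured PA record; field names are illustrative."""
    auth_number: str
    approved_cpt_codes: frozenset
    valid_from: date
    valid_to: date


def find_unauthorized_codes(auth, billed_cpt_codes, service_date):
    """Return billed codes that the authorization does not cover."""
    if not (auth.valid_from <= service_date <= auth.valid_to):
        # Outside the approval window, no billed code is covered.
        return list(billed_cpt_codes)
    return [code for code in billed_cpt_codes
            if code not in auth.approved_cpt_codes]


# The clinic was approved for 72148 but performed 72158.
auth = Authorization("AUTH-001", frozenset({"72148"}),
                     date(2025, 3, 1), date(2025, 3, 31))
mismatches = find_unauthorized_codes(auth, ["72158"], date(2025, 3, 10))
print(mismatches)  # ['72158']
```

Running a check like this when the procedure is documented, rather than at claim submission, leaves time to request an updated authorization.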

# 4 Coding and documentation errors

Unspecified diagnoses, incorrect modifiers, and insufficient documentation figure in more than 10% of claim denials.

These errors typically occur when documentation does not fully support the billed service. In many organizations, coding workflows are disconnected from clinical documentation, making it difficult to resolve ambiguities before claims are submitted. Clinical systems may also fail to capture required details such as laterality, dosage, or encounter complexity.

Such denials arise from a combination of outdated coding tools and the lack of automated claim-scrubbing. Without in-documentation guidance, coders must rely on manual interpretation, leading to errors and rework.

# 5 Duplicate or conflicting claims

These denials often result from mismatched claim statuses across different systems. One platform marks the claim as “not sent,” another tags it as “in process,” and a third one cannot confirm receipt. This can trigger automated or manual resubmission.

Another common cause is retry logic without idempotency. If a clearinghouse endpoint times out or returns an ambiguous response, the PMS may resend the claim without verifying the first attempt’s success. Over time, this leads to multiple submissions for the same patient, same date of service, and same CPT/diagnosis combination.

Insufficient lifecycle visibility is almost always the root cause of such errors. Without a single source of truth or deterministic events, providers cannot reliably distinguish a stuck claim from one that simply hasn’t updated yet.

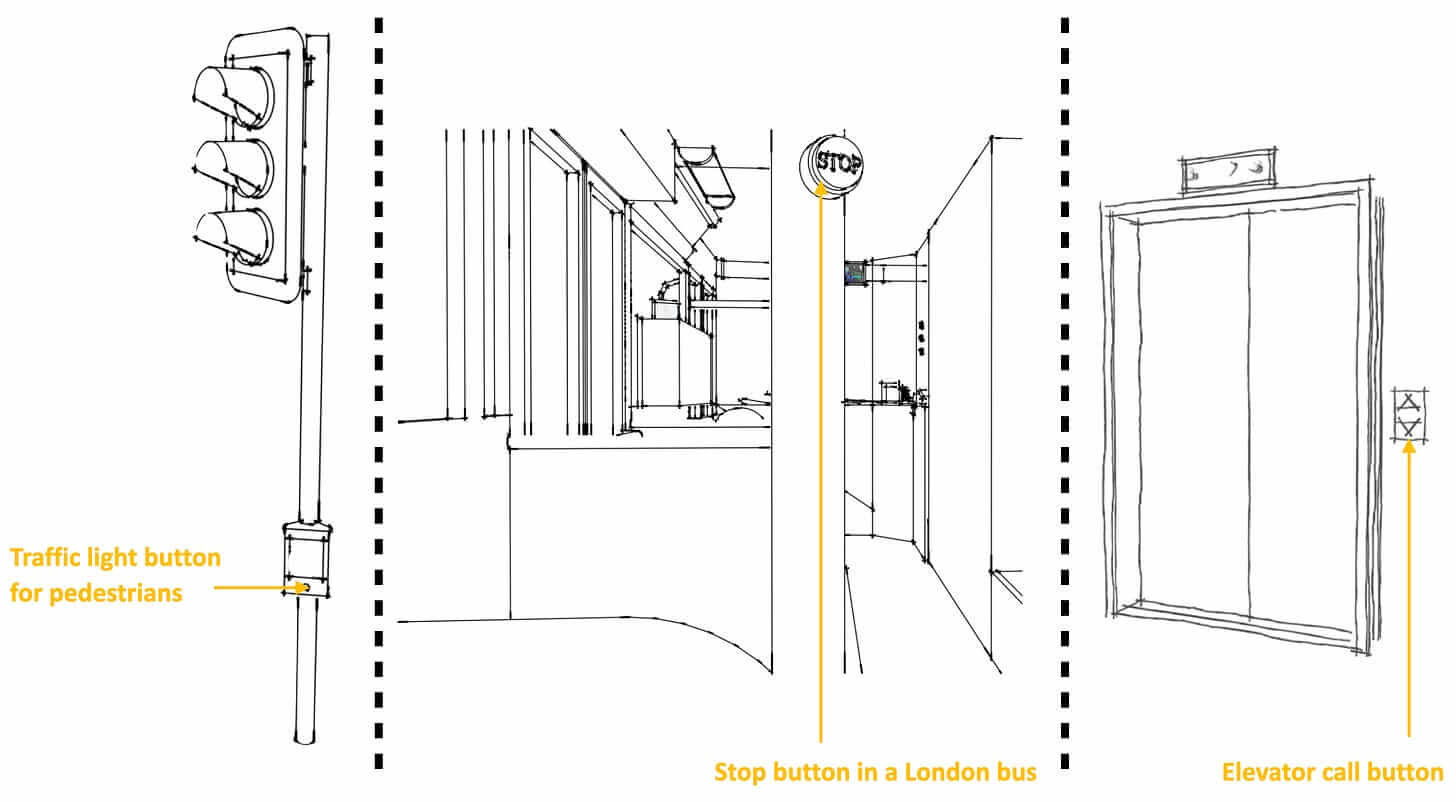

Three examples of idempotency in the real world. The outcome is always the same, no matter how many times you press the elevator button.

Image source: freeCodeCamp

# 6 Timely filing denials

Timely filing rules vary from 30 days to over a year, depending on the payer. Missed deadlines usually point to operational rather than clinical issues. Claims may sit in internal queues waiting for coding or documentation updates. Electronic acknowledgments (999/277CA files) may fail to post, leaving staff unaware that a claim was never accepted by the clearinghouse. An outage may cause a batch submission to miss its deadline. In organizations without real-time submission monitoring, these failures compound quickly.

# 7 Bundling and medical necessity disputes

Sometimes, multiple procedures performed during the same encounter are billed separately despite payer rules requiring them to be grouped. This results in bundling-related denials.

Medical necessity denials frequently involve imaging studies, surgical interventions, and chronic care encounters where payers expect documentation of conservative treatment attempts, diagnostic findings, or specific symptoms before approving advanced services.

These denials highlight two systemic issues: clinical documentation that does not reflect payer policies and billing systems that fail to apply bundling logic before submission. Both are preventable, which we’ll detail in the next section of the article.

Engineering & product strategies to reduce denials

We’ve previously described some of these strategies in our article on revenue cycle optimization. Now, let’s focus on denial management and prevention.

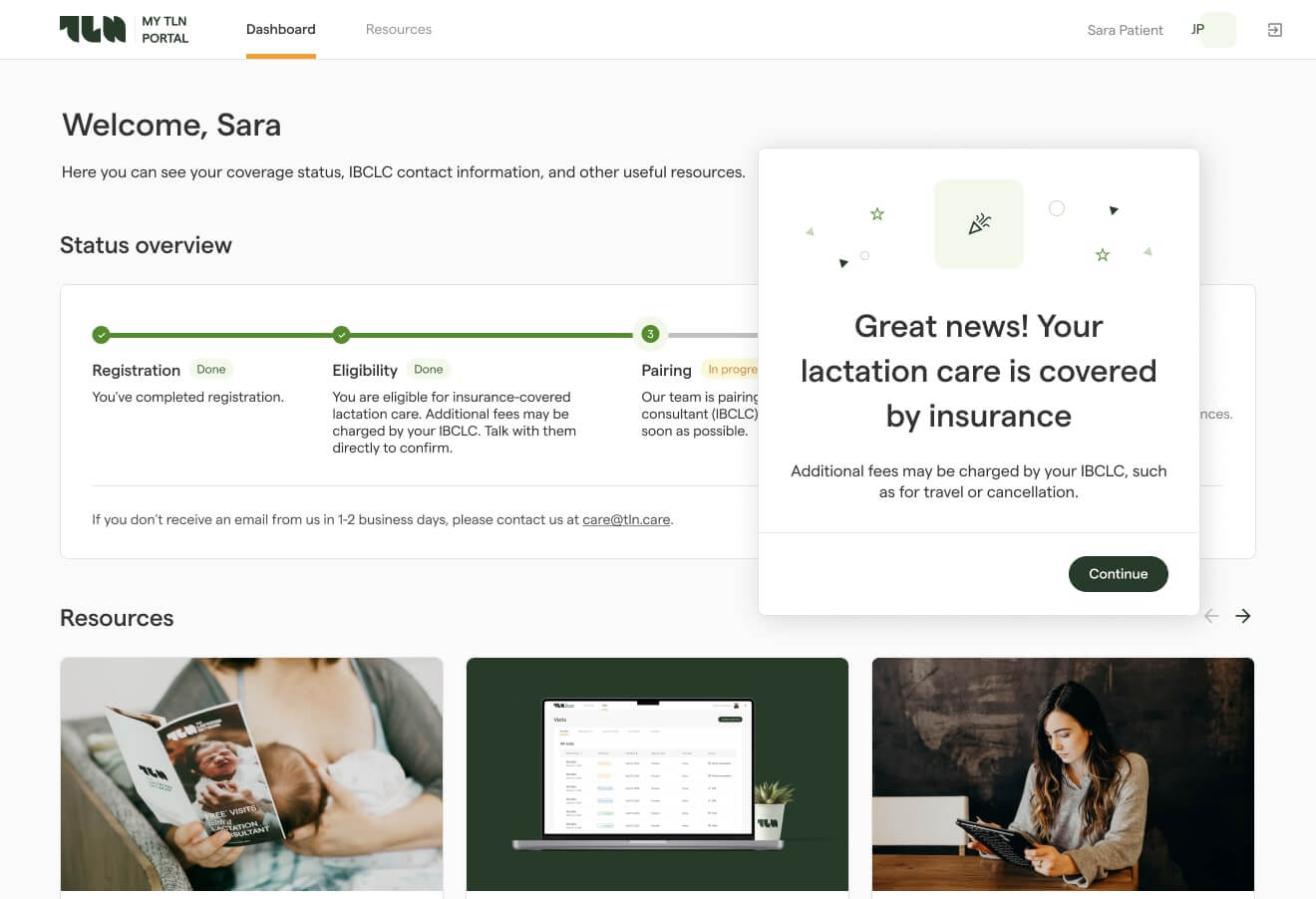

1 Real-time eligibility & coverage verification

Eligibility verification must be fully automated and embedded into both scheduling and pre-visit workflows. From the engineering standpoint, this requires:

- Integrations with clearinghouse APIs or payer-specific endpoints supporting EDI 270/271 or the emerging FHIR R4 eligibility resources (CoverageEligibilityRequest and CoverageEligibilityResponse). These integrations must be reliable, idempotent, and capable of handling high-volume queries across multiple payer networks.

- Eligibility check triggers at multiple points: at scheduling, 48–72 hours before the visit, and on the day of service for high-cost encounters.

- Caching with time-to-live (TTL) logic for stable responses and batch verification jobs for predictable, high-volume schedules.

- Graceful fallback to clearinghouse batch files or delayed verification pathways for payers without real-time APIs.

To minimize human error, the eligibility engine must display results within clinical and billing interfaces, not in isolated back-office queues. Staff should see clear indicators of coverage status along with the root causes of potential denials. Every check must be logged with raw response payloads, timestamps, and payer trace numbers for audits and dispute resolution.
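The TTL caching idea can be sketched in a few lines. This is an illustrative in-memory version, assuming a hypothetical `EligibilityCache` class and a `fetch` callable that stands in for the real 270/271 or API call. A production system would more likely use a shared cache and payer-specific TTLs.

```python
import time
from typing import Callable, Optional


class EligibilityCache:
    """In-memory TTL cache for eligibility responses (illustrative only)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (stored_at, response)

    def get(self, key: tuple) -> Optional[dict]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        stored_at, response = entry
        if self._clock() - stored_at >= self._ttl:
            del self._entries[key]  # expired: force a fresh payer query
            return None
        return response

    def put(self, key: tuple, response: dict) -> None:
        self._entries[key] = (self._clock(), response)


def verify_eligibility(cache, key, fetch):
    """Return a cached response if still fresh, otherwise call `fetch`."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    response = fetch(key)
    cache.put(key, response)
    return response


# Usage with a fake clock and a stubbed payer call (both hypothetical).
now = [0.0]
calls = []


def fake_fetch(key):
    calls.append(key)
    return {"status": "active", "checked_at": now[0]}


cache = EligibilityCache(ttl_seconds=3600, clock=lambda: now[0])
key = ("PAYER_A", "MEMBER-123", "2025-03-10")
verify_eligibility(cache, key, fake_fetch)  # miss: queries the payer
verify_eligibility(cache, key, fake_fetch)  # hit: served from cache
now[0] = 4000.0
verify_eligibility(cache, key, fake_fetch)  # expired: queries again
print(len(calls))  # 2
```

Injecting the clock keeps the expiry logic testable without waiting for real time to pass.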

Comprehensive eligibility verification platform developed by MindK

2 Prior-authorization state machine & lifecycle tracking

Reliable prior authorization means translating an often manual, fragmented workflow into a structured, deterministic system that reduces preventable denials. The core of this strategy is a PA lifecycle that mirrors how payers evaluate requests. Providers also need real-time visibility into each step. A well-implemented PA system includes:

- A formal state machine in which each authorization moves through clear, mutually exclusive states (Draft, Submitted, Pending Info, Acknowledged, In Review, Approved, Denied, Expiring, and Closed).

- Transitions between states triggered only by deterministic events: EDI 278 responses from payer APIs, portal updates collected via RPA or human entry, document uploads, or automated timeouts.

- Tight integration with scheduling, clinical documentation, and claim generation workflows.

The PA module should automatically determine whether authorization is required based on payer rules, CPT/HCPCS codes, diagnosis codes, and service location. If needed, the system should create a PA record in a Draft state, pre-populate it with available clinical and patient information, and surface it in the work queue of the appropriate team.

Post-submission, the system must continuously reconcile payer responses with internal state transitions so that authorized services are always linked to the correct CPT/HCPCS codes.
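A state machine of this kind can be expressed as an explicit transition table. The sketch below uses the state names listed above, but the allowed transitions are an assumption made for illustration; a real table would be derived from payer behavior and internal policy.

```python
from enum import Enum


class PAState(Enum):
    DRAFT = "Draft"
    SUBMITTED = "Submitted"
    ACKNOWLEDGED = "Acknowledged"
    PENDING_INFO = "Pending Info"
    IN_REVIEW = "In Review"
    APPROVED = "Approved"
    DENIED = "Denied"
    EXPIRING = "Expiring"
    CLOSED = "Closed"


# Assumed transition table: which states may follow which.
ALLOWED = {
    PAState.DRAFT: {PAState.SUBMITTED, PAState.CLOSED},
    PAState.SUBMITTED: {PAState.ACKNOWLEDGED, PAState.DENIED},
    PAState.ACKNOWLEDGED: {PAState.IN_REVIEW, PAState.PENDING_INFO},
    PAState.PENDING_INFO: {PAState.IN_REVIEW, PAState.CLOSED},
    PAState.IN_REVIEW: {PAState.PENDING_INFO, PAState.APPROVED, PAState.DENIED},
    PAState.APPROVED: {PAState.EXPIRING, PAState.CLOSED},
    PAState.EXPIRING: {PAState.CLOSED},
    PAState.DENIED: {PAState.CLOSED},
    PAState.CLOSED: set(),
}


class InvalidTransition(Exception):
    pass


class PriorAuthorization:
    def __init__(self, auth_id: str):
        self.auth_id = auth_id
        self.state = PAState.DRAFT
        self.history = [(PAState.DRAFT, "created")]  # audit trail

    def transition(self, new_state: PAState, event: str) -> None:
        """Move to `new_state` only if the table allows it; log the event."""
        if new_state not in ALLOWED[self.state]:
            raise InvalidTransition(
                f"{self.state.value} -> {new_state.value} is not allowed")
        self.state = new_state
        self.history.append((new_state, event))


pa = PriorAuthorization("PA-1")
pa.transition(PAState.SUBMITTED, "278 request sent")
pa.transition(PAState.ACKNOWLEDGED, "payer acknowledged receipt")
pa.transition(PAState.IN_REVIEW, "payer started review")
try:
    pa.transition(PAState.CLOSED, "manual close")
except InvalidTransition as exc:
    print(exc)  # In Review -> Closed is not allowed
```

Because every change goes through `transition`, the history doubles as an audit log of which event caused each state change.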

3 Coding accuracy automation, audits & AI

Engineering teams can support coding quality with multilayer automation. Scrubbing must run as early as possible, ideally during documentation and code selection. This allows clinicians and coders to correct issues before they cascade into denials.

It’s possible to prevent documentation-related denials by integrating the coding automation directly into your EHR clinical documentation tools. When a clinician documents a procedure, the system will highlight missing required elements based on the expected coding set. NLP models can support this by scanning clinical notes in real time and prompting staff to fill in gaps before coding begins.

Beyond front-end checks, accurate coding also requires retrospective auditing pipelines. For example, suppose a provider consistently bills a higher share of level-4 E/M visits than peers with similar patient complexity. A properly trained AI model will flag this as an outlier. The system would then generate structured audit tasks and assign them to human coders.

This combination of real-time guidance, automated detection, and audits helps to prevent coding and documentation-related denials.
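As a simple stand-in for a trained model, the level-4 E/M example can be approximated with a z-score over a peer group. This sketch assumes the input has already been restricted to providers with comparable patient complexity; the provider names, shares, and the threshold of 2.0 are invented for illustration.

```python
from statistics import mean, pstdev


def flag_em_outliers(level4_share_by_provider, z_threshold=2.0):
    """Return providers whose level-4 E/M share is unusually high vs. peers."""
    shares = list(level4_share_by_provider.values())
    mu, sigma = mean(shares), pstdev(shares)
    if sigma == 0:
        return []  # everyone bills identically; nothing to flag
    return sorted(
        provider
        for provider, share in level4_share_by_provider.items()
        if (share - mu) / sigma > z_threshold
    )


# Share of E/M visits billed at level 4, per provider (made-up numbers).
peer_group = {
    "dr_a": 0.30, "dr_b": 0.32, "dr_c": 0.28, "dr_d": 0.31,
    "dr_e": 0.29, "dr_f": 0.33, "dr_g": 0.27, "dr_h": 0.70,
}
print(flag_em_outliers(peer_group))  # ['dr_h']
```

A flag here is only a prompt for a human audit, not evidence of incorrect coding.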

4 Payer-aware validation engines

The goal is to evaluate a claim before it becomes an 837 file. A validation engine requires you to move from simple field checks toward a multi-layer, rules-driven system.

The first layer is a modular rules framework. Each rule must be version-controlled, independently testable, and hot-reloadable. This way, any payer/CMS/clearinghouse changes do not require code deployments. Rules should cover:

- Formatting constraints.

- CPT/ICD compatibility.

- Modifier logic.

- Facility and place-of-service requirements.

- Coverage restrictions.

- Taxonomy/NPI validation.

- Contract-specific exceptions.

To ensure maintainability, store rules as configuration rather than hard-coded logic, and expose validation results via APIs and workflow queues. Comprehensive logging and observability are essential to track rule hits, error categories, and false positives.

The next layer validates schemas (X12 837, 270/271, 999/277CA, or FHIR resources). The system then evaluates coding logic against current National Correct Coding Initiative (NCCI) edits and Medically Unlikely Edits (MUEs). It must also link eligibility metadata and authorization records so that codes align with coverage and prior auth requirements. For high-risk services, the engine may require supplemental documentation checks.

When implemented properly, the validation engine dramatically reduces downstream denials.
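To make "rules as configuration" concrete, here is a minimal sketch in which each rule is plain data and the engine only knows a handful of generic check types. The rule IDs, the `PAYER_X` payer, and its place-of-service restriction are hypothetical; in practice the rule list would be loaded from a versioned store rather than defined inline.

```python
import re

# Rules are data, not code. Each entry could live in a versioned config store.
RULES = [
    {"id": "NPI-FORMAT", "version": 3, "payers": ["*"],
     "field": "billing_npi", "check": "regex", "arg": r"\d{10}",
     "message": "Billing NPI must be 10 digits"},
    {"id": "POS-REQUIRED", "version": 1, "payers": ["*"],
     "field": "place_of_service", "check": "required", "arg": None,
     "message": "Place of service is missing"},
    {"id": "PAYER-X-POS", "version": 2, "payers": ["PAYER_X"],
     "field": "place_of_service", "check": "one_of", "arg": ["11", "22"],
     "message": "PAYER_X only accepts POS 11 or 22 (hypothetical rule)"},
]

# Generic check implementations, shared by all rules.
CHECKS = {
    "required": lambda value, arg: value not in (None, ""),
    "regex": lambda value, arg: isinstance(value, str)
    and re.fullmatch(arg, value) is not None,
    "one_of": lambda value, arg: value in arg,
}


def validate_claim(claim, rules=RULES):
    """Run every rule that applies to the claim's payer; return findings."""
    findings = []
    for rule in rules:
        applies = "*" in rule["payers"] or claim.get("payer_id") in rule["payers"]
        if not applies:
            continue
        value = claim.get(rule["field"])
        if not CHECKS[rule["check"]](value, rule["arg"]):
            findings.append({"rule_id": rule["id"],
                             "version": rule["version"],
                             "message": rule["message"]})
    return findings


claim = {"payer_id": "PAYER_X", "billing_npi": "123456789",
         "place_of_service": "99"}
for finding in validate_claim(claim):
    print(finding["rule_id"], "-", finding["message"])
```

Recording the rule ID and version with each finding is what makes it possible to track rule hits and false positives over time.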

5 Duplicate detection & idempotent submissions

Duplicate denials almost always indicate architectural gaps in the submission pipeline.

The same claim should never be treated as a new claim unless its underlying data changes. This strict idempotency requires the generation of claim IDs based on stable attributes, such as patient, date of service, rendering provider, place of service, and CPT/HCPCS codes. These IDs must be computed before the 837 file creation to provide consistent keys for downstream services. Hashing full claim bodies adds another layer of protection.
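A deterministic claim ID can be derived by hashing a canonical form of the stable attributes. The sketch below is one possible approach, assuming SHA-256 over sorted JSON; the function names and field names are illustrative.

```python
import hashlib
import json


def _digest(data: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no whitespace)."""
    canonical = json.dumps(data, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def claim_key(patient_id, date_of_service, rendering_npi,
              place_of_service, procedure_codes):
    """Stable identity of a claim, independent of code ordering."""
    return _digest({
        "patient_id": patient_id,
        "date_of_service": date_of_service,
        "rendering_npi": rendering_npi,
        "place_of_service": place_of_service,
        "procedure_codes": sorted(procedure_codes),
    })


def body_hash(claim_body: dict) -> str:
    """Hash of the full claim body, used to detect genuine changes."""
    return _digest(claim_body)


k1 = claim_key("P-100", "2025-03-10", "1234567890", "11", ["72148", "99213"])
k2 = claim_key("P-100", "2025-03-10", "1234567890", "11", ["99213", "72148"])
print(k1 == k2)  # True: same claim, different code order
```

The key answers "is this the same claim?", while the body hash answers "has anything in it changed?". A resubmission is only justified when the key matches and the body hash differs.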

Retries should go through idempotent APIs or job processors that simply update the status of an existing claim. Distributed architectures require additional safeguards:

- FIFO queues with deduplication (e.g., Amazon SQS FIFO).

- Record-locking to avoid parallel submissions.

- Reconciliation logic to merge out-of-order or partial acknowledgments (999, 277CA, 835).

If clearinghouse acknowledgments don’t arrive within SLAs, the system should trigger a validation workflow, not an automatic resubmission.
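The retry behavior described above can be sketched as a small ledger that refuses to send the same claim key twice and routes ambiguous outcomes to verification. The `SubmissionLedger` class, its status names, and the in-memory dictionary are assumptions for illustration; a real system would use a durable store with locking.

```python
class SubmissionLedger:
    """Tracks one record per claim key so retries never create duplicates."""

    def __init__(self, send):
        self._send = send      # callable that transmits the claim
        self.records = {}      # claim key -> {"status": ...}

    def submit(self, key: str, payload: dict) -> str:
        record = self.records.get(key)
        if record is not None:
            # Already attempted: report current status, never resend.
            return record["status"]
        self.records[key] = {"status": "SENDING"}
        try:
            self._send(payload)
            self.records[key]["status"] = "SENT"
        except TimeoutError:
            # Ambiguous outcome: the claim may or may not have arrived.
            # Route to a verification workflow instead of resubmitting.
            self.records[key]["status"] = "NEEDS_VERIFICATION"
        return self.records[key]["status"]


attempts = []


def flaky_send(payload):
    """Stub transport that always times out (hypothetical)."""
    attempts.append(payload)
    raise TimeoutError("clearinghouse did not respond")


ledger = SubmissionLedger(flaky_send)
first = ledger.submit("claim-key-1", {"total": 100})
second = ledger.submit("claim-key-1", {"total": 100})
print(first, second, len(attempts))
# NEEDS_VERIFICATION NEEDS_VERIFICATION 1
```

The second call returns the existing status without touching the transport, which is the property that prevents duplicate submissions after a timeout.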

6 Timely-filing monitoring & SLA alerting

Timely-filing denials are entirely avoidable with the right architecture. Payer-specific SLA engines can evaluate every claim’s deadline based on date of service, payer contract rules, claim type, and any extended windows allowed for corrected claims. This SLA engine must operate as part of the real-time claims pipeline.

Effective monitoring begins by capturing timestamps at each event. Claim creation, 837 generation, clearinghouse receipt, payer acceptance, and any resubmission attempts should all get granular timestamps.

You’ll always know whether the payer considers a claim “received” if the system ingests raw 999/277CA acknowledgments. Claims approaching expiration should automatically escalate into priority work queues. If the payer allows corrected claims within a shorter secondary window, the engine can route eligible claims into corrective workflows before time runs out.
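A deadline check of this kind reduces to date arithmetic plus a lookup of the payer's filing window. In the sketch below, the payer IDs, window lengths, claim fields, and the 14-day warning threshold are all hypothetical; real windows come from payer contracts.

```python
from datetime import date, timedelta

# Hypothetical filing windows in days; real values come from payer contracts.
FILING_WINDOW_DAYS = {"PAYER_A": 90, "PAYER_B": 365}


def filing_deadline(date_of_service: date, payer_id: str) -> date:
    return date_of_service + timedelta(days=FILING_WINDOW_DAYS[payer_id])


def triage(claim: dict, today: date, warn_days: int = 14) -> str:
    """Classify a claim by how close it is to its filing deadline."""
    if claim.get("payer_accepted_at") is not None:
        return "ON_TRACK"  # payer acknowledged receipt; clock is satisfied
    deadline = filing_deadline(claim["date_of_service"], claim["payer_id"])
    days_left = (deadline - today).days
    if days_left < 0:
        return "EXPIRED"
    if days_left <= warn_days:
        return "ESCALATE"  # move into a priority work queue
    return "ON_TRACK"


claim = {"payer_id": "PAYER_A", "date_of_service": date(2025, 1, 10),
         "payer_accepted_at": None}
print(filing_deadline(claim["date_of_service"], "PAYER_A"))  # 2025-04-10
print(triage(claim, today=date(2025, 4, 1)))                 # ESCALATE
```

The acceptance timestamp should be set only from an ingested payer acknowledgment, not from the act of sending the claim.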

7 API-first integrations and interoperability

Modern RCM requires event-driven communication between clinical, billing, and payer systems. Implementing this strategy begins by replacing brittle, point-to-point interfaces with a unified claims pipeline. It must normalize data from the EHR, PMS, and ancillary systems into a common model before generating 837 files or FHIR claim resources.
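Normalizing into a common model might look like the following sketch. The `CanonicalEncounter` type and both source record layouts are invented for illustration; real EHR and PMS payloads will differ, but the idea is the same: one adapter per source, one shape downstream.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class CanonicalEncounter:
    """Common model consumed by the claim generator (illustrative)."""
    correlation_id: str
    patient_id: str
    date_of_service: date
    procedure_codes: tuple
    source: str


def from_ehr(record: dict) -> CanonicalEncounter:
    # Hypothetical EHR layout: nested patient, ISO dates, list of procedures.
    return CanonicalEncounter(
        correlation_id=record["encounterId"],
        patient_id=record["patient"]["mrn"],
        date_of_service=date.fromisoformat(record["serviceDate"]),
        procedure_codes=tuple(sorted(p["code"] for p in record["procedures"])),
        source="EHR",
    )


def from_pms(record: dict) -> CanonicalEncounter:
    # Hypothetical PMS layout: flat fields, US dates, comma-separated codes.
    return CanonicalEncounter(
        correlation_id=record["visit_no"],
        patient_id=record["pat_id"],
        date_of_service=datetime.strptime(record["dos"], "%m/%d/%Y").date(),
        procedure_codes=tuple(sorted(c.strip() for c in record["cpts"].split(","))),
        source="PMS",
    )


ehr = from_ehr({"encounterId": "E-1", "patient": {"mrn": "P-100"},
                "serviceDate": "2025-03-10",
                "procedures": [{"code": "72148"}]})
pms = from_pms({"visit_no": "E-1", "pat_id": "P-100",
                "dos": "03/10/2025", "cpts": "72148, 72158"})

# Same encounter, but the two systems disagree on what was performed.
print(ehr.correlation_id == pms.correlation_id)    # True
print(ehr.procedure_codes == pms.procedure_codes)  # False
```

Once both sources share one shape, discrepancies like the differing procedure codes can be detected before claim generation rather than after a denial.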

Integrations with clearinghouses should support both batch and API-based submission pathways, real-time status inquiries, and ingestion of raw EDI files. Consistent IDs allow tracing claim lifecycle events from encounter creation to payment. Version-controlled transformation logic ensures stable 837 generation and consistent mapping of clinical concepts into billing constructs.

Where payers offer modern APIs, direct connections make sense for prior authorization, eligibility, claim status, documentation uploads, and appeals. An event-driven architecture built on message buses or streaming platforms lets updates propagate reliably as soon as new information arrives.

Observability is non-negotiable. Systems must include distributed tracing (OpenTelemetry), structured logging, correlated identifiers, metrics dashboards, and lineage tracking so teams can troubleshoot denials and identify systemic issues quickly.

8 AI-driven denial prediction & risk scoring

AI/ML models can analyze historical claims, payer responses, documentation patterns, and patient demographics to assign real-time denial risk scores. These scores should appear in billing and coding interfaces, prompting staff to resolve issues before submission. Models can be trained on features such as CPT/ICD combinations, payer behavior, service location, provider patterns, missing documentation indicators, and prior outcomes.

Engineers should implement continuous-learning pipelines: after each 835 or denial event, feedback loops retrain or fine-tune models. LLMs can summarize payer policies, surface required documentation elements, and even draft appeal letters. The system must include governance for model accuracy, drift detection, explainability, and privacy controls.
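Before investing in a full ML pipeline, a smoothed historical denial rate per payer and procedure code can serve as a baseline score. The sketch below is that baseline, not a trained model; the class name, the prior values, and the sample history are assumptions for illustration.

```python
from collections import defaultdict


class DenialRateBaseline:
    """Smoothed denial rate per (payer, CPT) pair; a baseline, not an ML model."""

    def __init__(self, prior_denials: float = 1.0, prior_total: float = 10.0):
        # The prior acts like 10 imaginary claims with 1 denial, so pairs
        # with little or no history get a moderate score instead of 0 or 1.
        self._prior_denials = prior_denials
        self._prior_total = prior_total
        self._counts = defaultdict(lambda: [0, 0])  # key -> [denied, total]

    def record_outcome(self, payer: str, cpt: str, denied: bool) -> None:
        """Feedback loop: call this after each 835 or denial event."""
        counts = self._counts[(payer, cpt)]
        counts[0] += int(denied)
        counts[1] += 1

    def risk_score(self, payer: str, cpt: str) -> float:
        denied, total = self._counts.get((payer, cpt), (0, 0))
        return (denied + self._prior_denials) / (total + self._prior_total)


model = DenialRateBaseline()
history = [("PAYER_A", "72158", True), ("PAYER_A", "72158", True),
           ("PAYER_A", "72158", True), ("PAYER_A", "72158", False)]
history += [("PAYER_A", "99213", False)] * 6
for payer, cpt, denied in history:
    model.record_outcome(payer, cpt, denied)

print(round(model.risk_score("PAYER_A", "72158"), 3))  # 0.286
print(round(model.risk_score("PAYER_A", "99213"), 3))  # 0.062
print(round(model.risk_score("PAYER_B", "72158"), 3))  # 0.1
```

A baseline like this is also easy to explain to billing staff, which gives a reference point when evaluating whether a more complex model is worth its governance overhead.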

Conclusion

Claim denials are a structural drain on revenue, operations, and even equity. Behind every preventable denial is a breakdown in data integrity, integrations, or workflows. Most of these issues are preventable long before any appeal or write-off.

For those who are serious about financial health, denial prevention should be treated as a core architectural requirement. Payer-aware validation engines, structured prior-authorization workflows, deterministic claim pipelines, and real-time monitoring all work toward a single goal: stopping preventable denials before a claim is ever submitted.