There’s one thing that keeps Single-Page Applications (SPAs) from dominating all other kinds of apps: they can take forever to launch. This is a huge problem, as even a one-second delay can cost you 7% of conversions.

But just like you can make your single-page app SEO-friendly, you can improve its performance.

So here’s our detailed guide for those who want to speed up their SPA in 2020. If you’re new to the SPA concept, I’d recommend starting with our article on what a Single Page Application is and how it compares to traditional multi-page apps.

Table of contents:

- SPA monitoring

- Lazy rendering

- Lazy data fetching

- Static content caching

- WebSockets

- Bypassing the same-origin policy

- Content Delivery Networks (CDN)

Monitor your SPA performance

There are a lot of tools that can help you monitor SPA performance. For a start, you can use Chrome DevTools and extensions such as Lighthouse, Ember Inspector, or React Developer Tools (with its Profiler).

The problem with such tools is that they don’t reflect the perceived loading speed of your SPA (which is the only thing that matters to users).

There is a whole other group of metrics, like Speed Index, that try to reflect the actual user experience.

But real users come from a wide range of devices and networks, and it’s hard to account for all of them in a synthetic testing environment.

We advise you to use Real User Monitoring (RUM). It passively tracks every interaction people have with your application, giving you an accurate, real-time picture of how users perceive its loading speed.

If you are a fan of ready-made solutions, here is a short list of RUM tools that support single-page applications:

- Dynatrace

- Catchpoint

- Akamai mPulse (formerly SOASTA)

- AppDynamics

- Raygun Real User Monitoring

- Sematext Experience

- New Relic Browser

You can also ask your development team to implement RUM for your SPA themselves.

To do so, they have to identify both the beginning of each successive navigation (also known as a soft or in-page navigation: after the app has launched, a user clicks links or buttons that lead to new “pages” inside your SPA) and the moment the page is fully loaded. There are a couple of ways developers can implement this:

- Use the Resource Timing API to detect when an AJAX call has been made and treat it as the beginning of an in-page navigation;

- Use a MutationObserver to detect modifications to the DOM, and identify the end of network activity via the Resource Timing API.

In practice, these methods have proven unreliable and imprecise. For one, your SPA might make AJAX calls to prefetch some data even when users don’t start an in-page navigation. Or the results may get skewed by network activity that doesn’t affect what’s happening on screen.
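To see how a background prefetch skews the first approach, here is a deliberately naive sketch of that heuristic. It is hypothetical, not any RUM vendor's algorithm: the entries only mimic the `initiatorType`, `startTime`, and `responseEnd` fields of `PerformanceResourceTiming`, and the mutation timestamps stand in for what a `MutationObserver` callback might record.

```javascript
// Naive soft-navigation detector (a hypothetical sketch, not a library API).
// `resourceEntries` mimic PerformanceResourceTiming objects; `mutationTimes`
// are timestamps (ms) at which a MutationObserver saw the DOM change.
function estimateSoftNavigation(resourceEntries, mutationTimes) {
  // Only AJAX-style requests are treated as navigation triggers.
  const ajax = resourceEntries.filter(
    (e) => e.initiatorType === 'xmlhttprequest' || e.initiatorType === 'fetch'
  );
  if (ajax.length === 0) return null;

  // Start: the earliest AJAX call. End: the later of the last DOM mutation
  // and the last AJAX response.
  const start = Math.min(...ajax.map((e) => e.startTime));
  const end = Math.max(...mutationTimes, ...ajax.map((e) => e.responseEnd));
  return { start, end, duration: end - start };
}

// A background prefetch at 100 ms and a click-driven fetch at 5000 ms.
const entries = [
  { name: '/api/prefetch', initiatorType: 'fetch', startTime: 100, responseEnd: 180 },
  { name: '/api/profile', initiatorType: 'fetch', startTime: 5000, responseEnd: 5200 },
];
const estimate = estimateSoftNavigation(entries, [5250, 5300]);
```

Here `estimate.start` comes out as 100 rather than 5000, so the reported navigation is about five seconds longer than what the user actually experienced.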

To handle this, developers can expose a simple custom API that the app calls explicitly to mark when loading starts and ends:

```javascript
const rumObj = new RumTracking({
  'web-ui-framework': 'EMBER'
});

// App launch: window.performance.timing.navigationStart is the start marker
rumObj.setPageKey('feed_page_key');
// Do rendering
rumObj.appRenderComplete();

// Successive navigation
rumObj.appTransitionStart();
rumObj.setPageKey('profile_page_key');
// Do rendering
rumObj.appRenderComplete();
```

With this method, the instrumentation code has to be added to every page.

Many SPA frameworks have lifecycle hooks that can be used to automate the instrumentation. In Ember, for example, it could look like this:

```javascript
// Add instrumentation for the start of a successive navigation
router.on('willTransition', () => {
  rumObj.appTransitionStart();
});

// Add instrumentation for when rendering is complete
router.on('didTransition', () => {
  Ember.run.scheduleOnce('afterRender', () => {
    rumObj.appRenderComplete();
  });
});
```

This way, developers can use navigationStart from the Navigation Timing API to detect the beginning of the initial navigation, while the router’s willTransition event signals the beginning of an in-page navigation.

By listening to the didTransition event and scheduling work in the afterRender queue, you’ll know when the page has fully rendered, both at launch and after in-page navigations.
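The `RumTracking` object above is a custom API, so its internals aren't shown. To make the three calls concrete, here is a minimal hypothetical tracker with the same method names. It assumes `performance.now()` as the clock and an injected `report` callback; in a browser you might forward the results to your backend with `navigator.sendBeacon()`.

```javascript
// Hypothetical stand-in for a custom RumTracking object (not a published library).
class SimpleRumTracker {
  constructor({ now = () => performance.now(), report = console.log } = {}) {
    this.now = now;
    this.report = report;
    this.pageKey = null;
    // In a browser, performance.now() counts from the document's time origin
    // (navigation start), so the initial launch is measured from 0.
    this.startTime = 0;
    this.mode = 'launch';
  }

  setPageKey(pageKey) {
    this.pageKey = pageKey;
  }

  // Called when an in-page navigation begins (e.g. from willTransition).
  appTransitionStart() {
    this.startTime = this.now();
    this.mode = 'in-page';
  }

  // Called once the new view has rendered (e.g. from the afterRender queue).
  appRenderComplete() {
    this.report({
      pageKey: this.pageKey,
      mode: this.mode,
      duration: this.now() - this.startTime,
    });
  }
}
```

Injecting `now` and `report` keeps the timing logic separate from how results are sent, which also makes the tracker easy to test with a fake clock.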

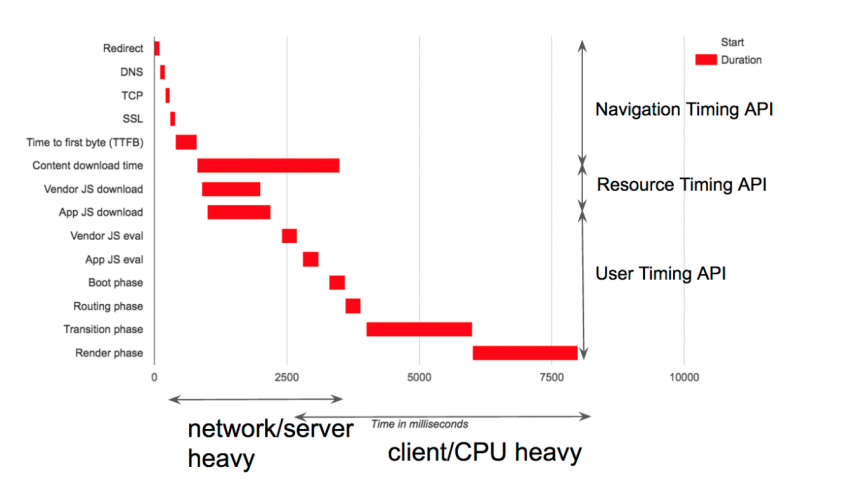

Now you’ve got everything you need to measure loading times. To discover performance bottlenecks, you’ll need to break down your RUM data: you can mark and measure individual phases with the User Timing API and get the loading times of individual resources from the Resource Timing API.

How a performance waterfall might look for a typical SPA. Source: LinkedIn

Now it’s time to analyze the RUM data and see what you can do about your bottlenecks.

Top 6 ways to improve SPA performance

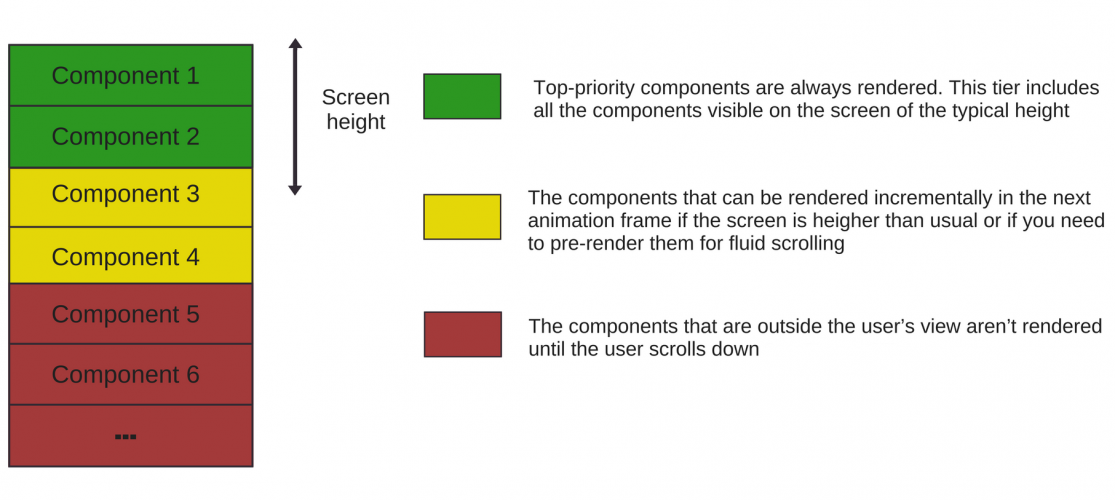

1. Lazily render below-the-fold content

Simply put, this means rendering only those parts of the page that are visible to the user at the moment (i.e. above-the-fold content).

Lazy rendering is useful if your SPA spends a lot of time in the render phase (see the waterfall chart above). At this stage, the app builds the DOM (the tree of objects that represents the text, images, and other elements on the page) for all the components on the page.

Once the DOM is built for the above-the-fold components, you can yield the browser’s main thread so it can paint them. This speeds up the SPA launch because you don’t spend time rendering components the user can’t see yet.

Another performance boost may come from assigning rendering priorities to the components on the page, so that they are rendered in order of importance rather than all at once.

This way, you can speed up the First Meaningful Paint (i.e. the time when the user sees the page’s core content). By not rendering some of the non-visible components, you’ll also improve Time To Interactive.
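Here is a minimal sketch of both ideas together: render above-the-fold components first, yield so the browser can paint, then render the rest in priority order. The component descriptor shape (`{ name, priority, aboveFold, render }`) is a hypothetical convention, and `setTimeout(0)` is only a portable way to yield; in a browser you could instead trigger below-the-fold rendering with an `IntersectionObserver`.

```javascript
// Priority-based lazy rendering (hypothetical component descriptors).
// Lower `priority` means more important.
async function renderByPriority(
  components,
  yieldToMain = () => new Promise((resolve) => setTimeout(resolve, 0))
) {
  const byPriority = [...components].sort((a, b) => a.priority - b.priority);
  const aboveFold = byPriority.filter((component) => component.aboveFold);
  const belowFold = byPriority.filter((component) => !component.aboveFold);
  const rendered = [];

  // 1. Render everything above the fold in one go.
  for (const component of aboveFold) {
    component.render();
    rendered.push(component.name);
  }

  // 2. Yield so the browser can paint the above-the-fold content.
  await yieldToMain();

  // 3. Render below-the-fold components one at a time, yielding after each
  //    so user input is not blocked by a long rendering task.
  for (const component of belowFold) {
    component.render();
    rendered.push(component.name);
    await yieldToMain();
  }
  return rendered;
}
```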

2. Use lazy data fetching

After speeding up the rendering phase, you might want to look into the transition phase.

At this stage, the SPA loads the data, normalizes it, and pushes it to the store. To decrease the time spent in this phase, you’ll need to cut the amount of data handled by the SPA.

Just as you can lazily render below-the-fold content, you can defer loading the data until you really need it.

You can use one high-level call to fetch the data required for the First Meaningful Paint and another one to lazily load the rest of the page’s data.
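A minimal sketch of that two-call pattern follows. The endpoint paths are hypothetical, and `fetchJson` and `render` are injected so the flow is easy to follow; in a browser, `fetchJson` could be `(url) => fetch(url).then((res) => res.json())`.

```javascript
// Split one page load into a critical request and a deferred one.
// The endpoint paths below are hypothetical.
async function loadFeedPage(fetchJson, render) {
  // 1. Fetch only what the first meaningful paint needs, and render it.
  const summary = await fetchJson('/api/feed?fields=summary');
  render('summary', summary);

  // 2. Fetch the rest afterwards; the core content is already on screen.
  const details = await fetchJson('/api/feed/details');
  render('details', details);
}
```

The trade-off is an extra request, which is usually worth it when the deferred data is large or slow to compute on the server.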

Note: the methods above work both at launch and for in-page navigations because they reduce front-end time.

Some SPA frameworks, such as React, Angular, or Vue, let developers split the application code into several bundles (usually with the help of a bundler such as webpack). You can load the bundles all at once or only when they’re needed. The second option can speed up the first navigation: for example, you can load only the parts that are immediately accessible to users and defer everything else (e.g. the parts that require authorization).
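On-demand loading usually comes down to a dynamic `import()`, which bundlers such as webpack turn into a separately loaded chunk. The helper below is a hypothetical sketch that requests a bundle on first use, reuses the same promise afterwards, and allows a retry if the download fails. The module path `./account-page.js` is an assumption for illustration.

```javascript
// Load a code-split bundle only when it is first needed.
function lazyModule(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader().catch((error) => {
        pending = null; // allow a retry after a failed network request
        throw error;
      });
    }
    return pending;
  };
}

// Hypothetical module path: the account area is only loaded after sign-in.
const loadAccountPage = lazyModule(() => import('./account-page.js'));
```

Frameworks wrap the same idea: React's `React.lazy()` and Vue's `defineAsyncComponent()` both accept a function that returns an `import()` promise.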

3. Cache the static content

Investigate your SPA to figure out which images and other static resources you can store on the user’s device.

Reading data from memory or Web Storage takes far less time than sending an HTTP request, even to the best servers: device memory is much faster than the fastest network. So caching is your best friend.

For large collections, you can paginate and rely on the server for persistence, or implement an LRU (least recently used) policy that evicts the least recently used items from storage.
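A minimal in-memory LRU cache can be built on `Map`, which iterates keys in insertion order: re-inserting a key on every read moves it to the "most recent" end, so the first key is always the eviction candidate. This sketch keeps entries in memory only; persisting them to Web Storage or IndexedDB would be an extra step.

```javascript
// Minimal LRU cache built on Map's insertion-order iteration.
class LruCache {
  constructor(maxEntries) {
    this.maxEntries = maxEntries;
    this.entries = new Map();
  }

  get(key) {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key);
    this.entries.delete(key);
    this.entries.set(key, value); // mark as most recently used
    return value;
  }

  set(key, value) {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      // The first key in iteration order is the least recently used one.
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }

  has(key) {
    return this.entries.has(key);
  }
}
```

For example, with `new LruCache(2)`, storing `a` and `b`, reading `a`, and then storing `c` evicts `b`.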

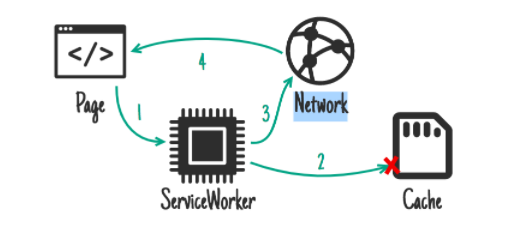

You can use Service Workers for caching static content in SPAs.

They’re scripts that the browser runs in the background, separately from the page. You can use them to reduce network traffic and enable offline functionality. Once a service worker is installed, the page’s requests go through it first: if the requested resource is in the cache, the service worker returns the cached copy; otherwise, it fetches the resource from the network.

You can also use the IndexedDB API to cache large amounts of structured data.

Source: hackernoon
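The cache-first behavior described above can be sketched as a service worker `fetch` handler built on the Cache API (`caches.open()`, `cache.match()`, `cache.put()`). The lookup logic is kept in a separate function so it can also be exercised with a stand-in cache object; the cache name `static-v1` is a hypothetical choice.

```javascript
// Cache-first lookup: serve from the cache, otherwise fetch and store.
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) return cached; // cache hit: no network round trip

  const response = await fetchFn(request);
  // Only store successful responses; clone() because a body can be read once.
  if (response && response.ok) {
    await cache.put(request, response.clone());
  }
  return response;
}

// Hypothetical cache name; changing it lets you start from a fresh cache.
const STATIC_CACHE = 'static-v1';

// This only runs inside a service worker script, where `self` is the
// ServiceWorkerGlobalScope and the `caches` global exists.
if (typeof self !== 'undefined' && typeof caches !== 'undefined') {
  self.addEventListener('fetch', (event) => {
    if (event.request.method !== 'GET') return; // let non-GET requests through
    event.respondWith(
      caches.open(STATIC_CACHE).then((cache) => cacheFirst(event.request, cache, fetch))
    );
  });
}
```

The page registers the worker with `navigator.serviceWorker.register('/sw.js')`, where `/sw.js` is a hypothetical path to the script above.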

4. Use WebSockets where appropriate (i.e. for highly interactive apps)

WebSocket is a protocol that allows bidirectional communication between the user’s browser and the server over a single persistent connection. Unlike with HTTP, the client doesn’t have to keep sending requests to the server to get new messages. Instead, the browser simply listens to the server and receives each message when it’s ready.

As a result, in some cases the connection can be up to 400% faster than regular HTTP, where the client has to keep polling for updates.

You can use libraries such as Socket.IO to implement WebSockets in your SPA.
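With the browser's built-in `WebSocket` API, receiving server-pushed updates takes only a few lines. In this sketch, the URL and the `{ type: 'subscribe', channel }` message format are hypothetical (your server defines its own protocol), and the `WebSocketImpl` parameter exists only so the function can be tested outside a browser.

```javascript
// Receive server-pushed updates over a WebSocket instead of polling.
function subscribe(url, channel, onUpdate, WebSocketImpl = globalThis.WebSocket) {
  const socket = new WebSocketImpl(url);

  // Tell the server which updates we want once the connection is open.
  socket.addEventListener('open', () => {
    socket.send(JSON.stringify({ type: 'subscribe', channel }));
  });

  // Each message is pushed by the server; no request is needed to get it.
  socket.addEventListener('message', (event) => {
    onUpdate(JSON.parse(event.data));
  });

  return socket;
}

// Example (hypothetical endpoint):
// subscribe('wss://example.com/live', 'notifications', console.log);
```

Note that Socket.IO adds its own protocol on top of WebSocket, so a Socket.IO server expects the Socket.IO client rather than a plain `WebSocket`.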

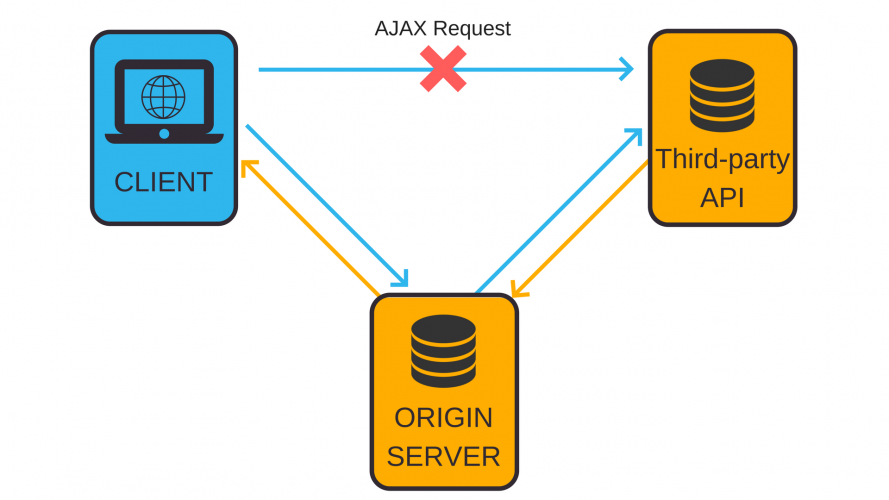

5. Employ JSONP/CORS to bypass the same-origin policy

A lot of apps depend on third-party APIs for some of their features.

But due to the same-origin policy, scripts in your app can’t read responses from AJAX calls to a different origin (another scheme, host, or port) unless that server explicitly allows it. To reach a third-party API, the app has to use its own origin server as a proxy.

The extra roundtrip means more latency.

You can request the resource directly if you don’t process the retrieved data and don’t store it in your system. For this you can use either JSON with Padding (JSONP) or Cross-Origin Resource Sharing (CORS).

- JSON with Padding

JSONP leverages the fact that browsers allow pages to load <script> tags from other domains. With a JSONP request, you dynamically create a <script> tag whose URL points to the resource and passes the name of a callback function as a query parameter.

The server responds with JavaScript that calls this callback, passing the JSON data as its argument.
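A minimal sketch of both sides of a JSONP exchange follows. The query parameter name `callback` is a common convention but an assumption here (each JSONP API picks its own), and the callback naming scheme is hypothetical. `doc` defaults to the browser's `document` and can be swapped out for testing.

```javascript
// Client side: build the script URL, including the callback name.
function buildJsonpUrl(baseUrl, params, callbackName) {
  const url = new URL(baseUrl);
  for (const [key, value] of Object.entries(params)) {
    url.searchParams.set(key, value);
  }
  url.searchParams.set('callback', callbackName);
  return url.toString();
}

// Client side: inject a <script> tag and resolve with the data the server
// passes to the global callback.
let jsonpCounter = 0;
function jsonp(baseUrl, params = {}, doc = globalThis.document) {
  return new Promise((resolve, reject) => {
    const callbackName = `__jsonpCallback${jsonpCounter++}`;
    const script = doc.createElement('script');
    const cleanUp = () => {
      delete globalThis[callbackName];
      script.remove();
    };
    globalThis[callbackName] = (data) => {
      cleanUp();
      resolve(data);
    };
    script.onerror = () => {
      cleanUp();
      reject(new Error(`JSONP request to ${baseUrl} failed`));
    };
    script.src = buildJsonpUrl(baseUrl, params, callbackName);
    doc.head.appendChild(script);
  });
}

// Server side: the "padding" is a call to the requested callback wrapped
// around the JSON payload. Validate the name so it can't inject code.
function padJson(callbackName, data) {
  if (!/^[A-Za-z_$][\w$]*$/.test(callbackName)) {
    throw new Error('Invalid callback name');
  }
  return `${callbackName}(${JSON.stringify(data)});`;
}
```

Because the response runs as a script in your page, JSONP is only appropriate for providers you fully trust.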

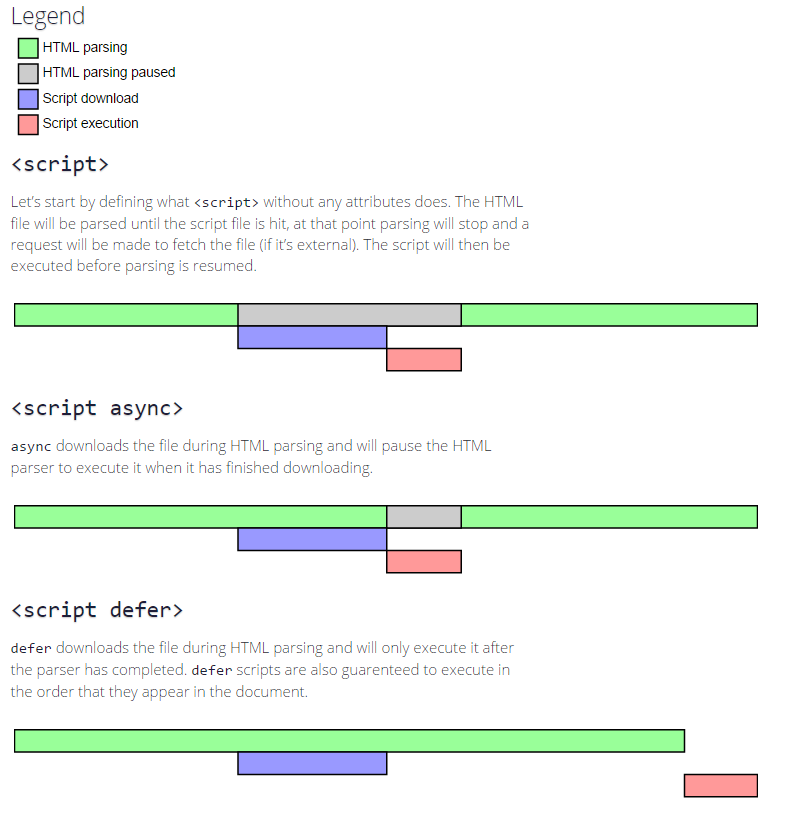

But there is one downside: because it relies on <script> tags, JSONP only works for GET requests. Separately, you can improve the performance of external scripts by adding the async or defer attribute to them. Without either attribute, the browser has to download and execute the script before it can continue parsing and displaying the rest of the page.

This slows down the perceived loading speed.

With the async attribute, the browser doesn’t stop parsing the page while it downloads the script, but it still pauses parsing while the script executes. The defer attribute, on the other hand, delays script execution until the page has been fully parsed.

Source: growingwiththeweb.com

- Cross-Origin Resource Sharing

CORS lets your server specify which origins can access its content and which methods and headers they may use.

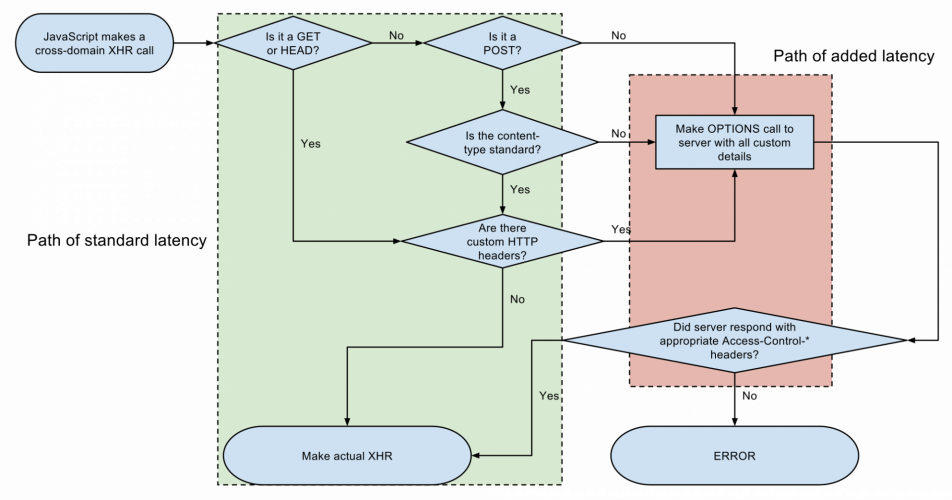

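But there is an issue. Requests that use any method other than GET, HEAD, or POST, or that set headers beyond a small safelist (including a Content-Type other than application/x-www-form-urlencoded, multipart/form-data, or text/plain), trigger a preflight check to confirm the server accepts such cross-origin requests.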

To run the check, the browser first sends an OPTIONS request describing the origin, method, and headers of the intended cross-origin call. Based on this information, the server decides whether to allow it. Only after receiving a successful response does the browser send the actual request for the third-party resource.

As preflight checks add a second roundtrip, they can effectively double your latency.

How preflight checks can
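The rules above can be condensed into a small check. This is a simplified sketch, not the full Fetch standard algorithm: it ignores header value length and byte restrictions, the `Range` header, upload listeners, and streamed bodies, so treat it as a rule of thumb.

```javascript
// Simplified check for whether a cross-origin request triggers a CORS preflight.
const SIMPLE_METHODS = new Set(['GET', 'HEAD', 'POST']);
const SAFELISTED_HEADERS = new Set(['accept', 'accept-language', 'content-language', 'content-type']);
const SIMPLE_CONTENT_TYPES = new Set([
  'application/x-www-form-urlencoded',
  'multipart/form-data',
  'text/plain',
]);

function needsPreflight(method, headers = {}) {
  if (!SIMPLE_METHODS.has(method.toUpperCase())) return true;
  for (const [name, value] of Object.entries(headers)) {
    const header = name.toLowerCase();
    if (!SAFELISTED_HEADERS.has(header)) return true; // e.g. Authorization
    if (header === 'content-type') {
      // Compare only the MIME type, ignoring parameters such as charset.
      const mimeType = String(value).split(';')[0].trim().toLowerCase();
      if (!SIMPLE_CONTENT_TYPES.has(mimeType)) return true;
    }
  }
  return false;
}
```

For example, a POST with `Content-Type: application/json` or any request carrying an `Authorization` header needs a preflight, while a GET that only sets `Accept: application/json` does not.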

You can use one of the following methods to deal with preflight requests:

1. Design your APIs so that requests use only the GET, HEAD, and POST methods and only the Accept, Accept-Language, Content-Language, and Content-Type headers (with one of the three allowed Content-Type values), as such requests don’t trigger preflight checks.

The Accept header lets the client specify which content types it accepts. Browsers send text/html as the preferred type for page navigations, but your app’s API calls can declare application/json (or any other type) as the only acceptable one. Your backend then checks the Accept header and responds with HTML, JSON, or another format accordingly.

2. Cache preflight responses to reduce the number of subsequent checks.

In this case, you can’t rely on the usual Cache-Control header to define the caching policy. Instead, use the Access-Control-Max-Age header: its numeric value specifies how many seconds the browser may cache the preflight response.
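Here is a sketch of the headers a server might return for a preflight (OPTIONS) request, including `Access-Control-Max-Age`. The allowed origins, methods, and headers are hypothetical configuration values. Note that browsers cap the max age (Chromium, for example, at 7,200 seconds), so very large values won't help.

```javascript
// Headers for a successful preflight response (hypothetical configuration).
function buildPreflightHeaders(requestOrigin, {
  allowedOrigins,
  allowedMethods = ['GET', 'POST', 'PUT', 'DELETE'],
  allowedHeaders = ['Content-Type', 'Authorization'],
  maxAgeSeconds = 600,
}) {
  // Unknown origins get no CORS headers, so the browser blocks the real request.
  if (!allowedOrigins.includes(requestOrigin)) return null;
  return {
    'Access-Control-Allow-Origin': requestOrigin,
    'Access-Control-Allow-Methods': allowedMethods.join(', '),
    'Access-Control-Allow-Headers': allowedHeaders.join(', '),
    // Lets the browser reuse this preflight result for maxAgeSeconds seconds.
    'Access-Control-Max-Age': String(maxAgeSeconds),
    // The response depends on the Origin header, so caches must key on it.
    Vary: 'Origin',
  };
}
```

With Node's `http` module, for instance, an OPTIONS handler could send these with `res.writeHead(204, headers)` followed by `res.end()`.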

6. Use a content delivery network (CDN)

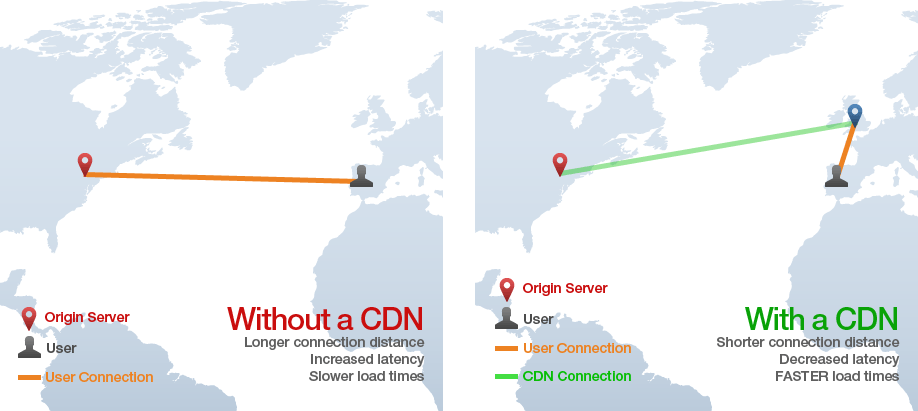

A CDN is a network of servers distributed around the world. You can use a CDN to deliver static resources like images more efficiently. Each server in the network keeps cached copies of the content hosted on the origin server.

If a user from Melbourne requests an image, the network won’t fetch it from the origin server located in New York. A CDN would use the Australian server (or the alternative with the least latency) to serve the cached content.

Using a CDN for a Single Page Application means faster loading of scripts and a shorter time to interactive. Many CDNs also add security features such as DDoS protection, which is a nice bonus.

While larger corporations often run their own CDNs, for most teams it’s more practical to use a provider such as Amazon CloudFront or Cloudflare.

Source: gtmetrix
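How long a CDN keeps your files is largely driven by the `Cache-Control` headers your origin sends (providers such as CloudFront and Cloudflare can be configured to respect them). Below is a sketch of one common policy. It assumes, hypothetically, that your build tool adds a content hash to asset file names (e.g. `app.3f9a1c2b.js`), so a changed file always gets a new URL.

```javascript
// Cache-Control policy for static assets served through a CDN.
// Assumes content-hashed file names such as app.3f9a1c2b.js (hypothetical).
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(?:js|css|png|jpe?g|svg|webp|woff2)$/i;

function cacheControlFor(path) {
  if (HASHED_ASSET.test(path)) {
    // The URL changes whenever the content does, so cache for a year.
    return 'public, max-age=31536000, immutable';
  }
  if (path === '/' || path.endsWith('.html')) {
    // The HTML entry point must be revalidated so users get new bundle URLs.
    return 'no-cache';
  }
  // Anything else: a short, shared cache lifetime.
  return 'public, max-age=300';
}
```

The key design choice is keeping the HTML entry point revalidated while letting hashed bundles live in CDN and browser caches for as long as possible.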

Concluding note

These are our top 6 ways to speed up a single-page application.

And here’s a bonus one: measure, optimize, repeat (but only when needed).

This advice applies to any application as optimization is a continuous process.

Every change you make to the code can affect the page load speed. So gauge how your SPA behaves and avoid premature optimization, where developers spend enormous amounts of time tweaking nonessential parts of their apps.

And if you need a team of experts to boost your SPA performance or build a brand-new application, you can always drop us a line.