Customers won’t churn because of ugly UI. They’ll churn because your software can’t get them paid. MedTech products are now expected to handle financial workflows, not just clinical ones, and weak revenue cycle functionality leads to billing errors, compliance risks, and unhappy customers.

Over the past few years, I have worked as a Lead Business Analyst on several healthcare revenue cycle optimization projects, both inside existing EHR/PM platforms and in standalone RCM automation tools. In my experience, the top performers in this niche share one trait: they treat the revenue cycle as a productized pipeline with owned rules, controlled schemas, and traceable events.

Table of contents:

- Treat RCM as a product

- Establish a solid baseline for optimizing

- Build where it matters, buy the rest

- Use event-driven architecture for reliability and auditability

- Prevent denials pre-visit by shifting checks left

- Capture clean, codable data at the source

- Turn payer rules into code to scrub and submit claims

- Eliminate denials at the root, not by firefighting

- Choose what to automate with ML/AI vs. rules

- Accelerate patient-pay with smart UX

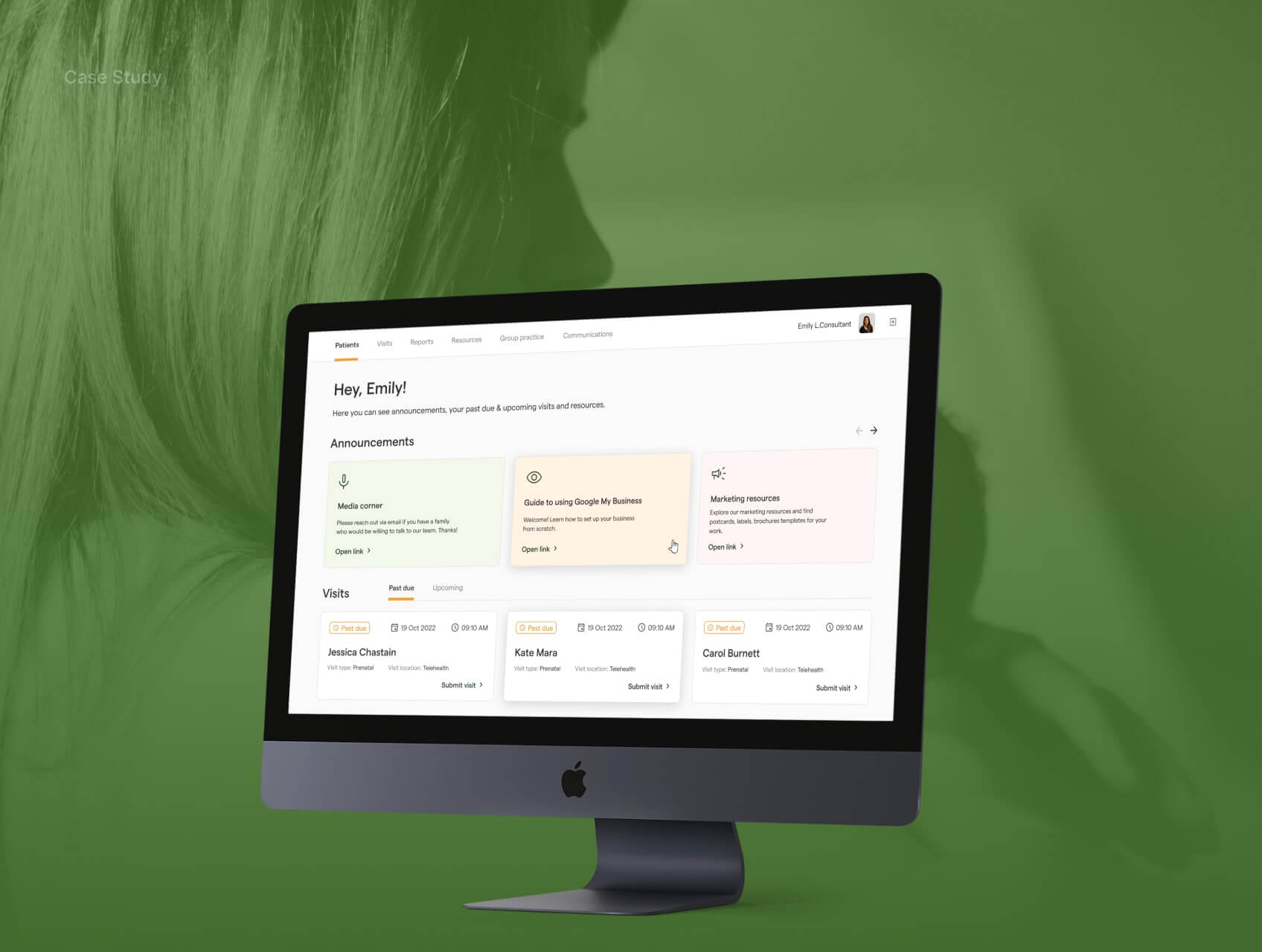

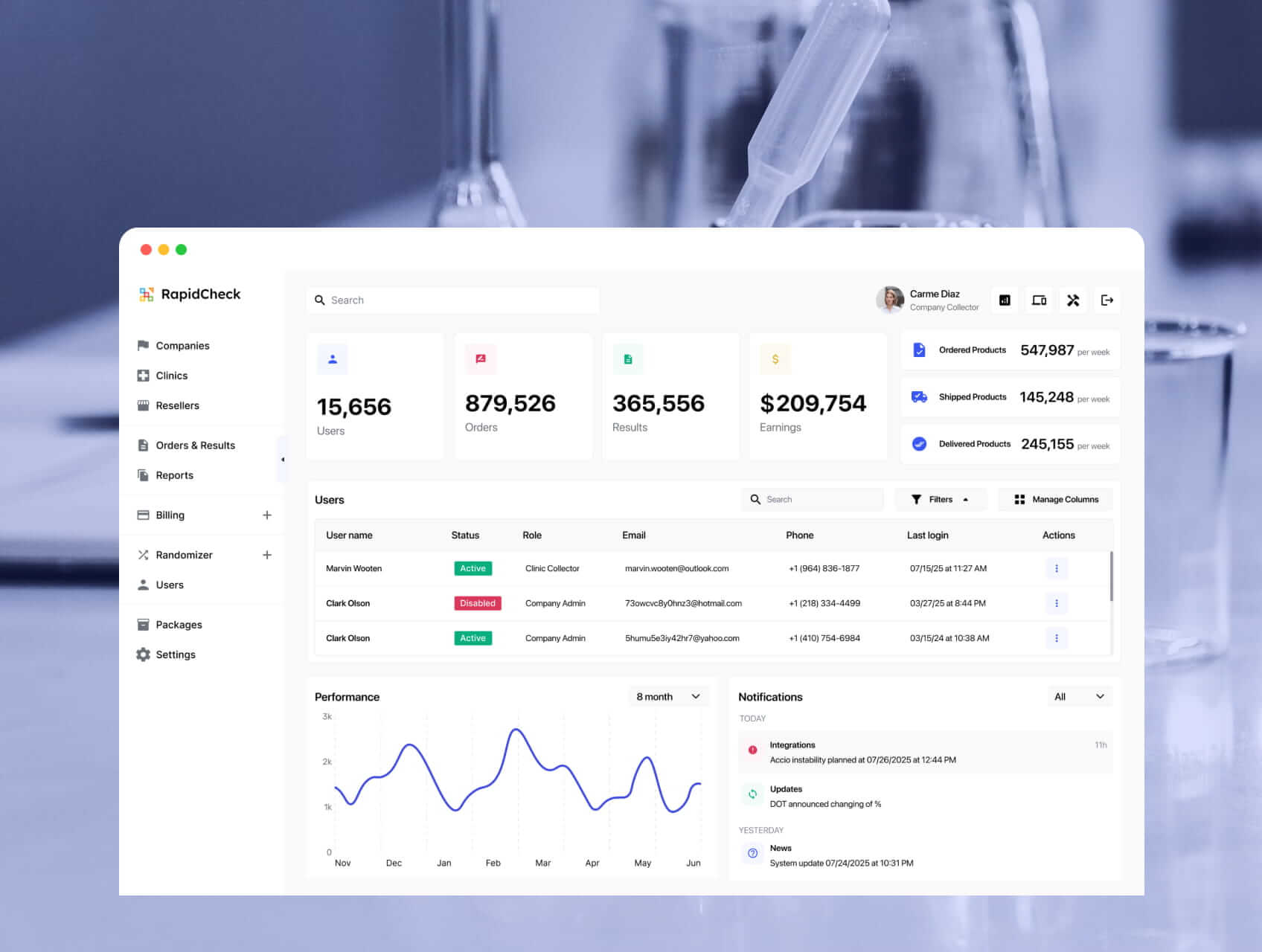

A specialty EMR system with a built-in RCM module developed by MindK

Treat RCM as a product with a single source of truth

RCM spans four phases: pre-visit (eligibility/benefits and prior authorization), time-of-service (codable documentation capture and charges), post-visit (837 claim scrubbing and submission, 277 tracking, 835 remittances, denial resolution), and patient collections (clear estimate/statement delivery and payment reconciliation). There are several places where things tend to fall apart:

- Work moves by tickets or messages, so people wait for each other.

- Systems that don’t talk to each other result in duplicate data entry.

- Inconsistent documentation of visits (key fields missing in encounter notes).

- Variations in insurer rules (formats, medical necessity, frequency limits, required modifiers).

- Brittle interfaces or mappings between EHR, clearinghouse, and payer portals.

According to Becker’s Hospital Review, up to 86% of denials are potentially avoidable. Most of these failures share a single root cause: no one owns the rules or the data model end-to-end.

When rules live in vendor UIs and schemas and mappings are scattered, a tiny companion-guide change can silently break submissions. Denials recur, first-pass yield (FPY) drops, and no one can answer who changed what or why.

The first step toward solving these problems is to establish a clear source of truth for each entity (patient, coverage, encounter, charges, claims). If you can always show when a claim changed, which rule changed it, and why, revenue cycle optimization becomes an engineering discipline.

Establish a solid baseline for optimizing

Teams often rush into automation without trustworthy baselines. Stand up a minimal metrics layer first. After a week or two of measurement, you’ll see where cash is stuck and which specialties or payers create the most variance. That’s where your first wins are.

| KPI | Definition | Target/Guardrail | Primary data source |
|---|---|---|---|
| First-Pass Yield (FPY) | % of claims paid on first submission | 90–95%+ | 837/835 match on trace IDs |
| Denial rate (avoidable) | % of claims denied for preventable reasons | ≤ 5–8% | 835 + normalized reason taxonomy |
| Days in A/R | Average days from submission to payment | 10–20% reduction vs. baseline | Billing ledger + 835 |
| Clean-claim rate | % of claims that pass the internal scrubber | ≥ 90–92% | Scrubber logs |
| Manual touches/claim | Average touches from coding to payment | 15–30% reduction vs. baseline | WFM + workqueue logs |
| Patient-pay yield | % of patient responsibility collected | 15–25% increase vs. baseline | Statements + payment gateway |
| Cost-to-collect | Total RCM cost / total collections | 2–6% | GL + collections |
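To make the baseline concrete, here is a minimal Python sketch that computes four of these KPIs from per-claim records. It assumes you have already joined 837 and 835 data on trace IDs into a hypothetical `ClaimRecord` shape and mapped denial reasons to an avoidable/unavoidable flag. It follows the table’s simplified definition of days in A/R (average days from submission to payment) rather than a ledger-based calculation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ClaimRecord:
    """Hypothetical per-claim summary built by joining 837 and 835 data on trace IDs."""
    trace_id: str
    submitted_on: date
    paid_on: Optional[date]          # None if not yet paid
    paid_on_first_submission: bool
    denied_avoidable: bool           # denial reason maps to a preventable category
    passed_scrubber: bool            # passed the internal scrubber on its first run


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal; 0.0 when there is nothing to measure."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def baseline_kpis(claims: list[ClaimRecord]) -> dict[str, float]:
    """Compute baseline KPIs using the table's definitions."""
    n = len(claims)
    paid = [c for c in claims if c.paid_on is not None]
    days = [(c.paid_on - c.submitted_on).days for c in paid]
    return {
        "fpy_pct": pct(sum(c.paid_on_first_submission for c in claims), n),
        "avoidable_denial_pct": pct(sum(c.denied_avoidable for c in claims), n),
        "clean_claim_pct": pct(sum(c.passed_scrubber for c in claims), n),
        "avg_days_to_payment": round(sum(days) / len(days), 1) if days else 0.0,
    }
```

Unpaid claims are excluded from the days-to-payment average, so watch the unpaid count alongside it.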

Build where it matters, buy the rest

You will not out-build a mature clearinghouse or a payment processor. The aim is to own the parts that make you different: payer rules, mappings, and the patient financial experience aligned to your care model.

Pick vendors that allow export of raw X12 and FHIR bulk data, expose webhooks or event streams, and don’t trap your rules behind a proprietary UI. If a vendor makes it painful to version rules outside their product, you’re renting your revenue cycle. Non-negotiable requirements for retaining flexibility and preventing vendor lock-in include:

- Bulk export of raw 837/835/277 and 270/271/278 with traceability.

- Rule API or file-based sync to external Git repo; reversible import/export.

- Webhooks/event stream for submission status and remits.

- Schema/companion-guide change notices ≥14 days before enforcement.

- BAA + clear data residency/retention + tamper-evident audit logs.

| Criterion | Weight | Buy A | Buy B | Build | Notes |
|---|---|---|---|---|---|
| Raw data export (X12/FHIR bulk, webhooks) | 5 | | | | Must include 837/835/277 payloads + trace IDs |
| Rules portability (version in Git, API access) | 5 | | | | No lock-in to GUI editors |
| Companion-guide flexibility | 5 | | | | Custom segments/loops supported |
| Roadmap control (SLAs, change-notice cadence) | 4 | | | | Required for schema drift mitigation |
| Integration burden (EHR/PM fit) | 4 | | | | Native FHIR + X12 adapters preferred |
| Security/Compliance (BAA, SOC 2, PHI in non-prod) | 4 | | | | Strict test-data policy |
| Total cost of ownership (3 yrs) | 4 | | | | Include hidden fees (per-transaction, storage) |
| Specialty fit (edits, auth workflows) | 3 | | | | Behavioral health/Imaging/Telehealth nuances |
| Observability (logs, metrics, SLOs) | 3 | | | | Exportable, not dashboards only |
| Team capability/risk | 3 | | | | Talent to own rules/mappers |
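Filling in the scorecard is simple arithmetic, but encoding it keeps the weights versioned alongside everything else. The Python sketch below uses the weights from the table, expects a 1–5 score per criterion for each option, and normalizes the result to 0–100. The criterion keys are hypothetical short names, and treating the weight-5 criteria as knockouts (any score below 3 disqualifies the option) is an assumption added here to reflect the non-negotiable requirements above; adjust or drop it.

```python
from typing import Optional

# Weights from the build-vs-buy scorecard; keys are hypothetical short names.
WEIGHTS = {
    "raw_data_export": 5,
    "rules_portability": 5,
    "companion_guide_flexibility": 5,
    "roadmap_control": 4,
    "integration_burden": 4,
    "security_compliance": 4,
    "tco_3yr": 4,
    "specialty_fit": 3,
    "observability": 3,
    "team_capability": 3,
}
KNOCKOUT_WEIGHT = 5      # assumption: weight-5 criteria act as must-haves
KNOCKOUT_MIN_SCORE = 3   # assumption: a must-have scoring below this disqualifies


def weighted_score(scores: dict[str, int]) -> Optional[float]:
    """Return a 0-100 weighted score, or None if a must-have criterion scores too low."""
    missing = sorted(WEIGHTS.keys() - scores.keys())
    if missing:
        raise ValueError(f"unscored criteria: {missing}")
    for name, score in scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{name}: score must be 1-5, got {score}")
        if WEIGHTS.get(name) == KNOCKOUT_WEIGHT and score < KNOCKOUT_MIN_SCORE:
            return None
    total = sum(weight * scores[name] for name, weight in WEIGHTS.items())
    best_possible = 5 * sum(WEIGHTS.values())
    return round(100 * total / best_possible, 1)
```

For example, an option scored 4 on every criterion gets 80.0; score each of Buy A, Buy B, and Build separately and compare.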

Use a reliable event-driven architecture

Once you decide what to own, design the rails that make ownership practical. The architecture that supports this is event-driven and typically includes:

- EHR/PM system (source of truth for encounters & charges).

- Integration layer; queue/stream (Kafka/EventBridge) for events.

- Eligibility & coverage discovery API(s); prior authorization orchestration; claim scrubber; rules engine; remittance parser; denials workqueue.

- Patient financial experience: estimates, SMS/email billing, payment plans, wallets.

As claims travel through several systems (EHR/PM, a clearinghouse, the payer), each step is recorded as a small, timestamped “event.” This lets you retry safely when a portal or API is flaky, link the 837/277/835 messages through a shared tracking ID, and maintain a tamper-evident audit trail.
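A minimal sketch of the consumer side of this design, in Python: events for one claim share a trace ID, and each event carries its own ID so a redelivered or retried message is recorded only once (an idempotent consumer). The `ClaimEvent` fields and event names are hypothetical, and in production this state would live in a durable store behind Kafka or EventBridge rather than in memory.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ClaimEvent:
    event_id: str        # unique per event; a retried delivery reuses the same ID
    trace_id: str        # shared by the 837, 277, and 835 for one claim
    kind: str            # e.g. "837_submitted", "277_accepted", "835_paid" (hypothetical names)
    occurred_at: datetime


class ClaimTimeline:
    """In-memory idempotent consumer that groups claim events by trace ID."""

    def __init__(self) -> None:
        self._seen: set[str] = set()
        self._by_trace: dict[str, list[ClaimEvent]] = defaultdict(list)

    def handle(self, event: ClaimEvent) -> bool:
        """Record an event once; return False for duplicates caused by retries."""
        if event.event_id in self._seen:
            return False
        self._seen.add(event.event_id)
        self._by_trace[event.trace_id].append(event)
        return True

    def timeline(self, trace_id: str) -> list[str]:
        """Event kinds for one claim in occurrence order, even if they arrived out of order."""
        events = sorted(self._by_trace.get(trace_id, []), key=lambda e: e.occurred_at)
        return [e.kind for e in events]

    def last_step(self, trace_id: str) -> Optional[str]:
        """Where the claim currently sits, e.g. to spot claims stalled after submission."""
        steps = self.timeline(trace_id)
        return steps[-1] if steps else None
```

Because duplicates are dropped by event ID, an upstream retry after a flaky portal call cannot double-count a submission or a remittance.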

This design pays off operationally. Engineering can deploy rule updates in hours with safety rails. RCM ops sees where a claim stalled and why. Compliance can answer, “Who changed this rule and on what evidence?” without a fire drill. And when portals wobble, you can degrade gracefully instead of halting cash.

#1 Clearinghouse and data-format strategy

Your clearinghouse is both a dependency and a failure domain. Treat formats and companion guides as code, not PDFs. This means keeping a living matrix of companion-guide differences by payer and clearinghouse. Introduce a schema registry for X12 and FHIR, and enforce contract tests for 837, 835, and 277.

Every mapper and transform validator should run as part of continuous integration. This way, interface brittleness shows up in pull requests instead of in delayed cash.

Before any change goes live, run a quick contract test against real payer samples to confirm the format still matches. Ship all rule and mapping changes through version control (Git) with peer review, automated checks, and an easy rollback if rejections spike. And when a payer updates its companion guide, the change pipeline should block the release until tests and mappings are updated.
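As an illustration of a contract test, the Python sketch below checks an 837 transaction set against a required-segment list and verifies that SE01 (the segment count) matches the number of segments from ST through SE, as X12 requires. It assumes `*` as the element separator and `~` as the segment terminator (real interchanges declare their delimiters in the ISA header), handles only a single transaction set, and uses a hypothetical `required` set as a stand-in for a payer’s companion-guide rules. The sample is synthetic and not a complete 837.

```python
def parse_segments(raw: str, seg_term: str = "~", elem_sep: str = "*") -> list[list[str]]:
    """Split an X12 string into segments, each a list of elements (segment ID first)."""
    return [seg.strip().split(elem_sep) for seg in raw.split(seg_term) if seg.strip()]


def contract_errors(raw: str, required: set[str]) -> list[str]:
    """Return contract violations for a single 837 transaction set; empty list means pass."""
    segments = parse_segments(raw)
    ids = [seg[0] for seg in segments]
    errors = [f"missing required segment {seg_id}" for seg_id in sorted(required - set(ids))]
    if "ST" in ids and "SE" in ids:
        st, se = ids.index("ST"), ids.index("SE")
        if segments[st][1:2] != ["837"]:
            errors.append("ST01 is not 837")
        expected = se - st + 1                      # ST and SE are both counted
        actual = segments[se][1] if len(segments[se]) > 1 else ""
        if actual != str(expected):
            errors.append(f"SE01 is {actual!r}, expected {expected}")
    return errors


# Synthetic sample for illustration only.
SAMPLE_837 = (
    "ST*837*0001*005010X222A1~"
    "BHT*0019*00*0123*20250101*1200*CH~"
    "CLM*PATACCT1*150***11:B:1*Y*A*Y*Y~"
    "SE*4*0001~"
)
```

In CI, a test runner would call `contract_errors` on each stored sample and fail the build on any non-empty result.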

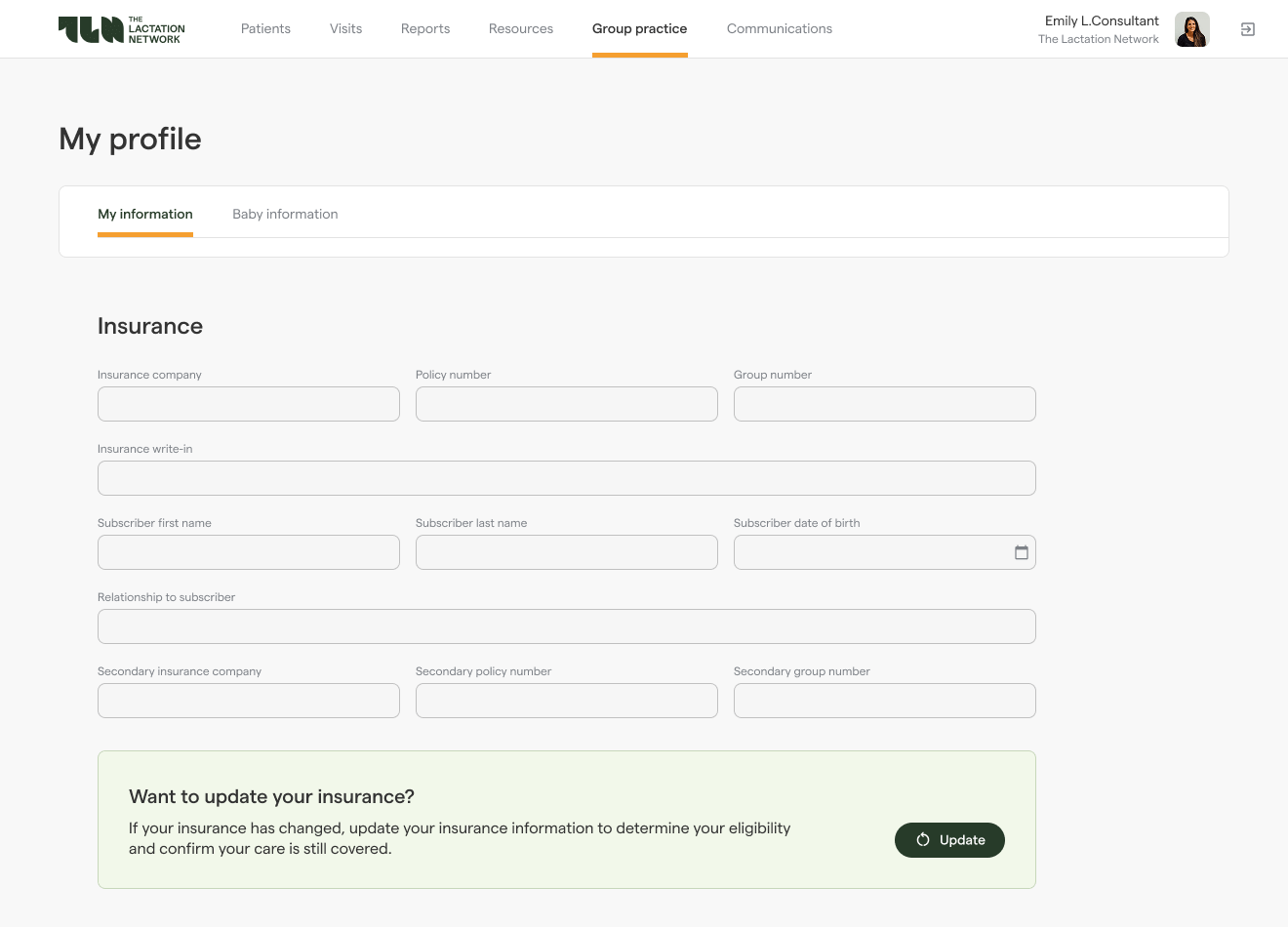

#2 Clinical and billing workflow alignment

Most preventable denials originate upstream. The goal is to align provider documentation with coding needs and make gaps visible early. Codable data should be captured once and flow downstream cleanly. Use shared templates that enforce required fields (POS, modifiers, referring NPI, auth ID) and surface payer-specific prompts at the point of care.

Show NCCI/LCD/NCD checks and modifier prompts while the provider writes a note, not after. Preserve charge-capture lineage by mapping orders and schedules to charges, with timestamps and authorship so you can explain every line.

Finally, keep a tight feedback loop. A gold standard is a 48-hour coder-provider review where recurring issues are fixed upstream in templates, rules, or mappings (not handled as one-off tips).

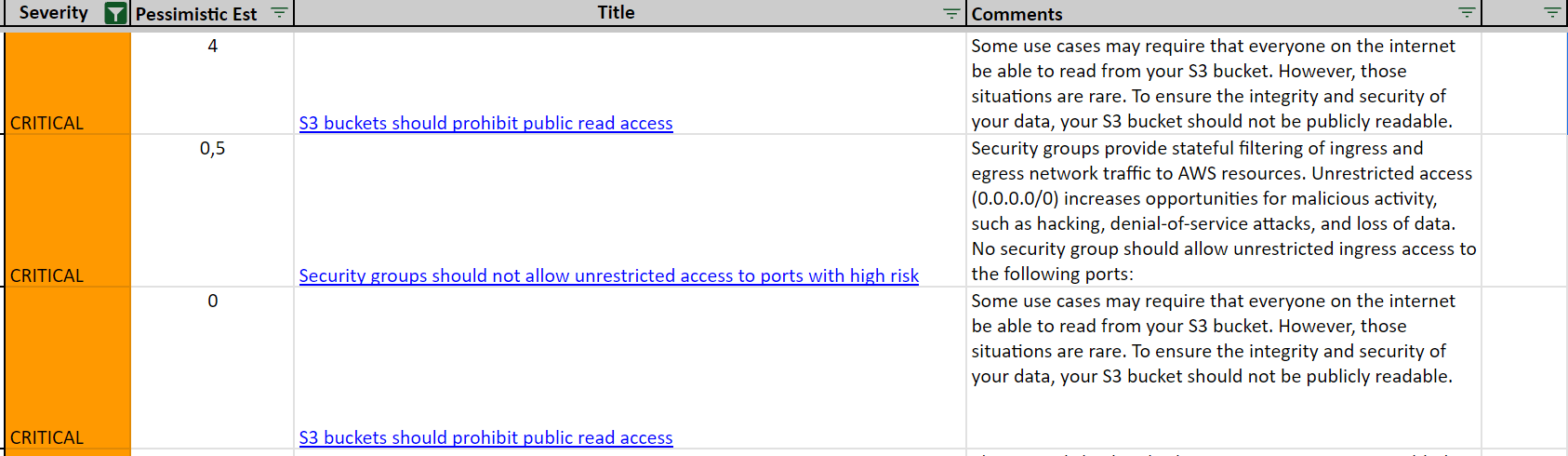

#3 Security & compliance

Security and compliance are first-order design constraints. HIPAA/SOC 2 guardrails include least-privilege IAM, SSO/MFA, device posture checks, DLP, and encryption in transit and at rest with managed KMS keys.

PHI in non-prod should be prohibited by default. Instead, use masked/synthetic data with a CI/CD pipeline that automatically blocks PHI artifacts in builds and logs.
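A CI gate for this can start as simply as a pattern scan over build artifacts and logs that fails the job on a match. The Python sketch below is illustrative only: the patterns (SSN-shaped numbers, `MRN`-labeled IDs, `DOB`-labeled dates) are assumptions, will miss plenty of PHI, and would need tuning to your own identifier formats, so a dedicated DLP tool should back it up.

```python
import re

# Illustrative patterns only; tune them to your own identifier formats.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
    "dob": re.compile(r"\bDOB:?\s*\d{1,2}/\d{1,2}/\d{4}\b", re.IGNORECASE),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every line that looks like it contains PHI."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PHI_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def main(paths: list[str]) -> int:
    """Scan files and return a process exit code: 1 if anything matched, else 0."""
    exit_code = 0
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for lineno, name in scan_text(fh.read()):
                print(f"{path}:{lineno}: possible PHI ({name})")
                exit_code = 1
    return exit_code
```

A CI step could pass the build output and log files to `main` and exit with its return value, so any match fails the pipeline.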

Tamper-evident audit trails are another must-have: append-only logs for rule changes, submissions, and remits. Secrets need centralized management and automated rotation, backed by quarterly access reviews and break-glass procedures.
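To show what “tamper-evident” means in practice, here is a minimal hash-chained log in Python: each entry stores the SHA-256 of its own content plus the previous entry’s hash, so editing or reordering any earlier entry breaks verification. This is a sketch, not a complete control. A chain alone cannot detect someone rewriting the whole log or dropping its newest entries, so production systems typically also anchor the latest hash in separate write-once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def append(self, actor: str, action: str, detail: dict) -> str:
        """Append an entry chained to the previous one and return its hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"actor": actor, "action": action, "detail": dict(detail), "prev_hash": prev_hash}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "detail", "prev_hash")}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```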

And don’t forget to sign a BAA with every third party that handles PHI to reduce vendor risk.

Check out our AWS+Terraform guide on HIPAA compliance for implementation details.

Now that we’ve detailed the event-driven architecture, it’s time to look at specific RCM optimizations.

Prevent denials pre-visit by shifting checks left

The cheapest denial is the one you never file. Eligibility, benefits, and prior-authorization checks should ideally happen days before the visit. This includes automating 270/271 eligibility sweeps and detecting auth-triggering combinations of CPT, diagnosis, payer, and place of service. Encounter records should store the authorization ID and links to required documentation so that both flow into downstream edits and appeals.
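Detecting auth-triggering combinations is a good fit for a small, versioned rules table. In the Python sketch below, the payer names, CPT codes, diagnosis prefixes, and place-of-service values are hypothetical examples, not real payer policy. A rule matches when payer and CPT match and its optional POS and diagnosis constraints are satisfied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AuthRule:
    payer: str
    cpt: str
    pos: Optional[str] = None           # None means any place of service
    dx_prefixes: tuple[str, ...] = ()   # empty means any diagnosis

    def matches(self, payer: str, cpt: str, dx_codes: list[str], pos: str) -> bool:
        if self.payer != payer or self.cpt != cpt:
            return False
        if self.pos is not None and self.pos != pos:
            return False
        if self.dx_prefixes and not any(code.startswith(self.dx_prefixes) for code in dx_codes):
            return False
        return True


# Hypothetical rules for illustration only; real rules come from payer policies.
AUTH_RULES = [
    AuthRule("PAYER_A", "72148"),                        # lumbar spine MRI, any POS
    AuthRule("PAYER_B", "72148", pos="22"),              # only at an outpatient hospital
    AuthRule("PAYER_A", "97110", dx_prefixes=("M54",)),  # therapy tied to back-pain diagnoses
]


def requires_auth(payer: str, cpt: str, dx_codes: list[str], pos: str) -> bool:
    """True if any rule says this CPT x DX x payer x POS combination needs prior auth."""
    return any(rule.matches(payer, cpt, dx_codes, pos) for rule in AUTH_RULES)
```

Keeping the table as data (rather than branching code) lets it live in Git and be reviewed like any other rule change.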

Whenever a patient books an appointment, the system should capture structured fields, including referring NPI, POS, and expected modifiers. Each discrepancy avoided before the visit reduces denials later on.

Prior authorization is where many otherwise clean claims stall. It becomes predictable, auditable, and fast when treated as an orchestrated flow:

- 278 API (where available): submit request/response cycles with correlation IDs; store determinations on the encounter; poll or subscribe to status updates.

- Portal workflow (when no API exists): pre-assemble the packet (problem list, notes, imaging reports), use RPA only as a last resort, and capture screenshots/PDFs and portal reference numbers for audit purposes.

Packets vary by specialty, but the core is consistent: demographics and coverage, referring NPI, clear clinical indications, prior imaging/labs, and proof of conservative therapy when required. Normalizing attachment taxonomies (e.g., PWK segments) speeds both submissions and appeals.

Commit to simple, visible SLA targets (submit by T-3 days, follow up by T-1 day, where T is the visit date). Trigger an alert when there’s no response within the expected window or when a denial cites missing documentation. Every missing-documentation denial should feed an upstream change (template or rule) so the next request is complete.
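The SLA logic is small enough to express directly. This Python sketch assumes T is the scheduled visit date and that “follow up by T-1” means raising an alert if no determination has arrived by that day; the function and parameter names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

SUBMIT_LEAD_DAYS = 3     # submit by T-3
FOLLOW_UP_LEAD_DAYS = 1  # follow up by T-1


def auth_sla_alerts(visit: date, today: date, submitted_on: Optional[date],
                    determination_received: bool) -> list[str]:
    """Return SLA alerts for one prior-auth request, where T is the visit date."""
    submit_by = visit - timedelta(days=SUBMIT_LEAD_DAYS)
    follow_up_by = visit - timedelta(days=FOLLOW_UP_LEAD_DAYS)
    alerts = []
    if submitted_on is None:
        if today > submit_by:
            alerts.append(f"not submitted by {submit_by.isoformat()} (T-{SUBMIT_LEAD_DAYS})")
    elif not determination_received and today >= follow_up_by:
        alerts.append(
            f"no determination by {follow_up_by.isoformat()} (T-{FOLLOW_UP_LEAD_DAYS}): follow up"
        )
    return alerts
```

Running this daily over upcoming visits gives the visible, per-request alerts the SLA calls for.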

Even with clear SLAs and alerts, prior auth can fail in a few predictable ways. Here’s how to prevent them:

- Wrong POS or modifier: suggest the correct combination inline during scheduling.

- Missing authorization number: block claims that require an AuthID from submission until the ID is present on the encounter.

- Portal field changes: treat them as schema drift that triggers an alert and a switch to a manual fallback while the mapper is updated via PR.
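Two of these safeguards can be sketched in a few lines of Python: a hard block on claims whose encounter needs an AuthID but lacks one, and a drift check that switches a portal integration to manual fallback when the portal’s form fields no longer match what the mapper expects. The `Encounter` shape and the expected field set are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Encounter:
    encounter_id: str
    auth_required: bool              # e.g. set at scheduling by an auth-trigger rule
    auth_id: Optional[str] = None


def submission_check(enc: Encounter) -> tuple[bool, str]:
    """Hard-block a claim whose encounter needs an AuthID but does not have one."""
    if enc.auth_required and not (enc.auth_id or "").strip():
        return False, f"{enc.encounter_id}: blocked, AuthID required but missing"
    return True, f"{enc.encounter_id}: ok to submit"


# Hypothetical field contract the portal mapper was built against.
EXPECTED_PORTAL_FIELDS = frozenset({"member_id", "cpt", "dx", "rendering_npi"})


def portal_mode(observed_fields: set[str]) -> str:
    """Fall back to manual handling when portal fields drift from the mapper's contract."""
    if frozenset(observed_fields) == EXPECTED_PORTAL_FIELDS:
        return "automated"
    return "manual_fallback"   # the caller should alert and open a PR to update the mapper
```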

For a more technical overview of prior authorization, check our guide on patient API implementation with Azure Health Data Services.

Capture clean, codable data at the source

Accurate coding results from well-designed encounter notes with:

- Provider templates with required fields that are impossible to miss (POS, modifiers, referring NPI, and AuthID when needed).

- Inline prompts that flag what’s missing or unsupported for a chosen code.

- Real-time checks (NCCI edits, LCD/NCD rules, and modifier suggestions) that keep clinicians and coders aligned.

- Automated charge capture from orders and schedules, with timestamped lineage for every charge line.

Showing where a charge originated, who added it, when, and based on which docs, makes it easier to defend the charge to payers.
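One way to make lineage defensible is to make it part of the charge line itself. In this Python sketch, the `ChargeLine` fields, the `order:<id>` source convention, and the order dictionary shape are assumptions; the point is that every line can be explained, and lines without supporting documentation can be flagged before submission.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ChargeLine:
    cpt: str
    units: int
    source: str                       # hypothetical convention: "order:<id>" or "schedule:<id>"
    added_by: str
    added_at: datetime
    supporting_docs: tuple[str, ...]


def charge_from_order(order: dict, author: str, at: datetime) -> ChargeLine:
    """Create a charge line from an order, carrying its lineage with it."""
    return ChargeLine(
        cpt=order["cpt"],
        units=order.get("units", 1),
        source=f"order:{order['id']}",
        added_by=author,
        added_at=at,
        supporting_docs=tuple(order.get("doc_ids", ())),
    )


def explain(line: ChargeLine) -> str:
    """Answer: where did this charge come from, who added it, when, and based on what?"""
    docs = ", ".join(line.supporting_docs) or "none"
    return (f"{line.cpt} x{line.units} from {line.source}, added by {line.added_by} "
            f"at {line.added_at.isoformat()}, docs: {docs}")


def undocumented(lines: list[ChargeLine]) -> list[ChargeLine]:
    """Charge lines with no supporting documentation, to review before submission."""
    return [line for line in lines if not line.supporting_docs]
```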

Turn payer rules into code for claim scrubbing

Payers differ in their formatting, medical necessity requirements, bundling policies, frequency limits, and companion guide details.

Externalize those rules into a versioned rules engine to simplify claim scrubbing and submission. Store rules and mappings in Git, run unit and contract tests against representative 837, 835, and 277 samples, and block merges when schema drift is detected. When edits are risky, test them on a subset of traffic and roll back automatically if rejections spike.
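A minimal Python sketch of both ideas, under stated assumptions: each scrub rule carries an ID and the version it shipped in (for example, a Git tag), and a canary decision compares the rejection rate of claims scrubbed by the new rule set against a control group. The rule shape, the 2-percentage-point threshold, and the 200-claim minimum sample are placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ScrubRule:
    rule_id: str
    version: str                                # e.g. the Git tag the rule shipped in
    check: Callable[[dict], Optional[str]]      # error message, or None if the claim passes


def scrub(claim: dict, rules: list[ScrubRule]) -> list[str]:
    """Run every rule; each finding names the rule version that produced it."""
    findings = []
    for rule in rules:
        message = rule.check(claim)
        if message is not None:
            findings.append(f"{rule.rule_id}@{rule.version}: {message}")
    return findings


def should_roll_back(canary_rejected: int, canary_total: int,
                     control_rejected: int, control_total: int,
                     max_increase: float = 0.02, min_sample: int = 200) -> bool:
    """Roll back if the canary's rejection rate exceeds control by more than max_increase."""
    if canary_total < min_sample or control_total == 0:
        return False  # not enough traffic yet to decide
    canary_rate = canary_rejected / canary_total
    control_rate = control_rejected / control_total
    return canary_rate - control_rate > max_increase
```

Tagging each finding with `rule_id@version` is what lets an audit answer which rule version rejected a given claim.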

Submission artifacts, including payloads, acknowledgments, rejection details, and clearinghouse trace identifiers, should be maintained as a single source of truth for audits and root-cause analyses.

Eliminate denials at the root

Denials shouldn’t be treated as isolated workqueue items. A better approach is to route them by reason and fix type, prioritize them by recoverability and value, and close the loop upstream. For example, a missing-auth-ID denial should trigger a change to the encounter template plus a rule that enforces the field. Likewise, a formatting rejection should trigger a mapper fix and a new companion-guide contract test.

| Denial reason | Typical root cause | Upstream fix | Owner | SLA to implement | Recurrence half-life target |
|---|---|---|---|---|---|
| Auth missing/invalid | Auth not obtained or not persisted | Add scheduling rule + encounter field; hard-block submission without AuthID | Product + Eng | 5 business days | < 2 weeks |
| Invalid modifier/POS | Template mismatch; payer nuance | Update template + rules table (CPT×DX×POS×payer) | Clinical Ops + Eng | 3 business days | < 2 weeks |
| Formatting/segment error | Mapper drift vs. companion guide | Update mapper + contract test; track vendor notices | Eng | 2 business days | < 1 week |
| Coverage inactive | Eligibility check late or stale | Batch sweeps; pre-service re-verification; COB workflow | RCM Ops | 3 business days | < 3 weeks |
| Documentation insufficient | Missing indications/attachments | Update packet checklist; inline prompts; attachment taxonomy | Clinical Ops | 7 business days | < 3 weeks |
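Routing and prioritization can follow directly from this table. The Python sketch below maps normalized denial reasons (hypothetical keys) to the upstream-fix owners listed above and ranks open denials by expected recovery, taken here as amount times a recoverability estimate such as the historical overturn rate. That scoring choice and the `RCM Ops triage` default for unmapped reasons are assumptions, not the only options.

```python
from dataclasses import dataclass

# Upstream-fix owner per normalized denial reason (keys are hypothetical), per the table above.
FIX_OWNERS = {
    "auth_missing": "Product + Eng",
    "invalid_modifier_pos": "Clinical Ops + Eng",
    "formatting": "Eng",
    "coverage_inactive": "RCM Ops",
    "documentation_insufficient": "Clinical Ops",
}


@dataclass
class Denial:
    claim_id: str
    reason: str
    amount: float
    recoverability: float   # 0..1, e.g. historical overturn rate for this reason and payer


def prioritize(denials: list[Denial]) -> list[tuple[str, str, float]]:
    """Route each denial to its fix owner and rank by expected recovery, highest first."""
    routed = [
        (d.claim_id, FIX_OWNERS.get(d.reason, "RCM Ops triage"), round(d.amount * d.recoverability, 2))
        for d in denials
    ]
    return sorted(routed, key=lambda item: item[2], reverse=True)
```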

The key is to track recurrence by root cause and measure its half-life. If it’s not shrinking, you’re firefighting, not improving.
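One way to measure recurrence half-life, assuming recurrences decay roughly exponentially: fit ln(count) = a - k·t to weekly counts by least squares, then half-life = ln(2) / k. A non-negative slope means the count is not shrinking. The Python sketch below skips zero-count weeks because their logarithm is undefined, which biases the estimate on sparse data.

```python
import math
from typing import Optional


def recurrence_half_life(weekly_counts: list[int]) -> Optional[float]:
    """Half-life in weeks from a least-squares fit of ln(count) = a - k*t; None if not shrinking."""
    points = [(t, math.log(c)) for t, c in enumerate(weekly_counts) if c > 0]
    if len(points) < 2:
        return None
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in points)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in points)
    slope = sxy / sxx          # this is -k
    if slope >= 0:
        return None            # flat or growing: firefighting, not improving
    return math.log(2) / -slope
```

For example, weekly counts of 80, 40, 20, 10 halve every week and give a half-life of 1.0 weeks.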

Start with rules, add AI where it makes sense

Deterministic rules are still the bread and butter of healthcare revenue cycle optimization. According to the 2025 State of Claims report, only 14% of healthcare professionals use AI to reduce denials, while 67% are confident AI can help with the claims process. For now, AI/ML makes sense where outcomes vary significantly and trustworthy labels are available.

The most valuable use cases include prediction, ranking, and triage tasks:

- Denial prediction & routing: score claim lines for likelihood of denial and route to the right queue. Inputs: payer, CPT×DX×POS, historical edits, provider, site. Success: fewer avoidable denials, higher overturn rate, precision@top-K triage.

- Propensity-to-pay segmentation: tailor statements, reminders, and payment plans. Inputs: balance size, prior behavior, payer/plan, demographics. Success: patient-pay yield growth without increasing the complaint rate.

- Missed-charge detection: flag encounters where documentation implies a billable service was omitted. Inputs: orders, note features, schedule/charge deltas. Success: incremental compliant revenue with a low false-positive rate.

- Prior-auth likelihood / next-best-action: estimate if auth is required and which documentation moves approval faster. Success: shorter auth turnaround; fewer “missing doc” denials.

- Workqueue triage ETA: predict time-to-resolve and auto-prioritize high-value/quick-win items. Success: touches/claim reduction and cash acceleration.
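Precision@top-K, listed above as a success metric for denial triage, is the share of the K highest-scored claim lines that actually were denied. A small Python sketch, assuming model scores and observed outcomes are aligned lists:

```python
def precision_at_k(scores: list[float], denied: list[bool], k: int) -> float:
    """Share of the K highest-scored claim lines that were actually denied."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not scores or len(scores) != len(denied):
        raise ValueError("scores and outcomes must be non-empty and aligned")
    ranked = sorted(zip(scores, denied), key=lambda pair: pair[0], reverse=True)[:k]
    return sum(1 for _, was_denied in ranked if was_denied) / len(ranked)
```

Choosing K to match the daily capacity of the review queue keeps the metric tied to what the team can actually work.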

Generative AI plays a more limited role in healthcare RCM optimization. Still, it can help generate appeal narratives from clinical notes and denial reasons, explain edit rationales to providers in plain language, suggest documentation gaps, and summarize payer policy changes into rule-change candidates. Whichever kind of model you use, apply these guardrails:

- Human-in-the-loop by design: appeals and patient communications should require review; risky edits gated by approval.

- No black-box steps on the critical path: every model output that affects money flow requires evidence (including features used, score, and threshold) and an audit record.

- Respect data boundaries: minimize PHI, prefer de-identified features, and avoid sending raw notes off-platform.

- Shadow mode: deploy models in shadow mode, compare vs. baseline rules, then ramp by cohort.

- Confidence threshold: below threshold, fall back to rules or human handling.

- Monitoring & drift: track calibration, precision/recall by payer/specialty, and feature drift; auto-open incidents on KPI regressions.
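Several of these guardrails (shadow mode, a confidence threshold with rule fallback, and an audit record with features, score, and threshold) can be combined in one decision function. The Python sketch below is hypothetical: it treats the model output as a denial probability, defines confidence as max(p, 1 - p), and uses placeholder route names, a placeholder 0.8 threshold, and a placeholder model version string.

```python
from typing import Any


def decide_route(claim_id: str, p_denial: float, features: list[str], rules_route: str,
                 threshold: float = 0.8, shadow: bool = True,
                 model_version: str = "denial-model-v1") -> tuple[str, dict[str, Any]]:
    """Pick a route for a claim and return it together with an audit record."""
    confidence = max(p_denial, 1 - p_denial)
    model_route = "pre_submission_review" if p_denial >= 0.5 else "submit"
    # The model decides only outside shadow mode and above the confidence threshold.
    use_model = not shadow and confidence >= threshold
    route = model_route if use_model else rules_route
    audit = {
        "claim_id": claim_id,
        "route": route,
        "decided_by": "model" if use_model else "rules",
        "model_route": model_route,          # logged even in shadow mode for comparison
        "p_denial": p_denial,
        "confidence": round(confidence, 4),
        "threshold": threshold,
        "features_used": sorted(features),
        "model_version": model_version,
    }
    return route, audit
```

Because shadow mode is the default, the rules route is used until someone explicitly ramps the model, and the logged `model_route` supports the baseline comparison.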

To sum up: ML boosts throughput where labeled data exists, while GenAI helps teams communicate and explain. Payer rules, schema conformance, and audit trails should remain the system of record.

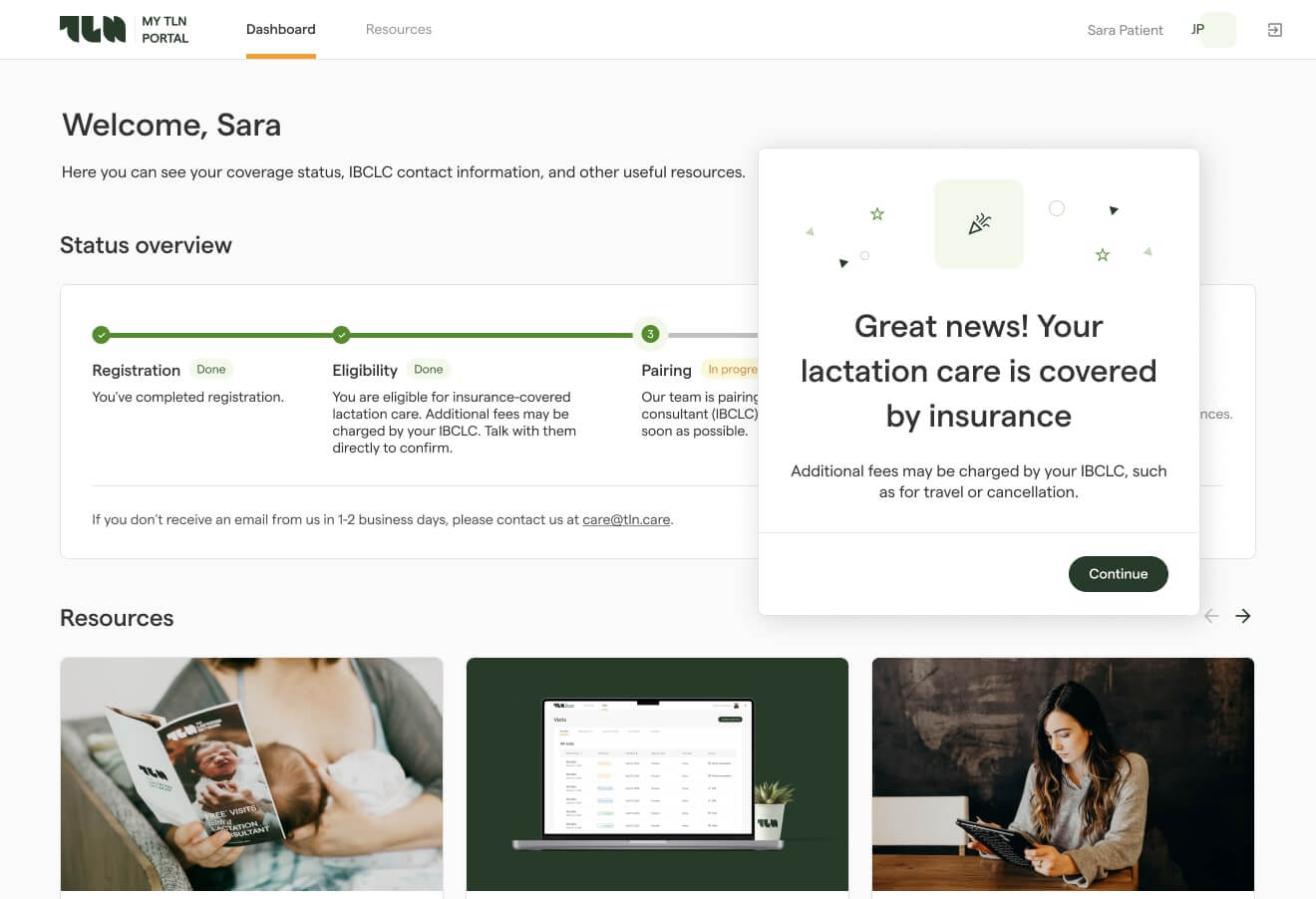

Accelerate patient-pay with UX optimization

Patients pay faster when paying is effortless and the numbers make sense. The final piece of RCM optimization is this experience:

- One-click pay: tokenized/expiring payment links, QR codes on statements, saved wallets, and automatic retries for soft-declines.

- Clear statements: itemized charges tied to encounters, plain-language descriptions, insurance adjustments, and a visible “dispute or ask a question” action.

- Compliance & consent: follow TCPA/FDCPA requirements; capture and log consent and opt-outs, support STOP keywords, and retain an audit trail.

- Reconciliation: payment gateway integrated with ledger; clean handling of refunds/chargebacks with exceptions in a queue.
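For the expiring-link part of one-click pay, a common pattern is an HMAC-signed token that encodes a statement ID and an expiry time, so the link carries no PHI and cannot be altered or used after it expires. The Python sketch below uses only the standard library; the `<statement_id>.<expires_at>.<signature>` token layout is an assumption, and card tokenization itself would still be handled by your payment gateway.

```python
import hashlib
import hmac
import time
from typing import Optional


def make_pay_token(statement_id: str, expires_at: int, secret: bytes) -> str:
    """Build a signed token "<statement_id>.<expires_at>.<hex signature>" for a payment link."""
    payload = f"{statement_id}.{expires_at}"
    signature = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{signature}"


def verify_pay_token(token: str, secret: bytes, now: Optional[float] = None) -> Optional[str]:
    """Return the statement ID if the token is authentic and unexpired, else None."""
    try:
        statement_id, expires_str, signature = token.rsplit(".", 2)
        expires_at = int(expires_str)
    except ValueError:
        return None
    expected = hmac.new(secret, f"{statement_id}.{expires_at}".encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison to avoid leaking signature bytes through timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    if current >= expires_at:
        return None
    return statement_id
```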

Outcomes vary by specialty, but teams that implement this approach typically gain several points of first-pass yield, cut avoidable denials by double digits, reduce days in A/R by ten to twenty percent, lower manual touches per claim, increase patient-pay yield, and shave meaningfully off the cost to collect.

Read our guide on healthcare UX design for more recommendations.

Conclusion

A reliable, audit-ready revenue cycle platform is a product capability, not a back-office afterthought. This means owning your rules and data, standardizing the formats that connect you to payers, and making every denial a code change.

With those three things in place, your CFO gets faster cash, clinicians have fewer billing headaches, and the compliance team has the evidence it needs for an audit.

From an engineering perspective, healthcare revenue cycle optimization requires FHIR/X12 integrations, rules-as-code, schema registries, CI gates, and tamper-evident audit trails: exactly the things MindK excels at. If you need a pragmatic build-vs-buy plan or want a stress test of your current setup, just get in touch with our team.